I can tell you all the benefits of good functional testing processes. The ROI, the faster releases, the reduced personnel cost. But what is often not talked about is the cost of bad functional testing processes. Why? Usually because it’s hidden.

When we talk about testing, we talk about the suite of tests being run, but rarely about how those tests are created or run. The cost also stays hidden because the people running the tests are the same ones who implemented them, and no one thinks their baby is ugly. That is especially true when you are busy running regression tests for a release candidate while preparing tests for the next release and overseeing the tests of the current one.

When I talk about bad functional testing, I’m not referring to failed tests and how to address them. I’m referring to poorly implemented tests and testing processes. Test implementation and maintenance is one of the largest time sinks for a QA team, but few organizations are deliberate about how it is done.

This is not the QA team’s fault; it’s the fault of the tools they have to use.

One of the biggest challenges with most functional test automation tools is that you have to know or predict a lot of things in advance. Nowadays, with continuous deployment, continuous delivery, and the need for speed, the QA team has features flying at them rapidly, sometimes every day, and it’s hard to know what new tests should or should not include. This fortune-teller skill is highly prone to human error.

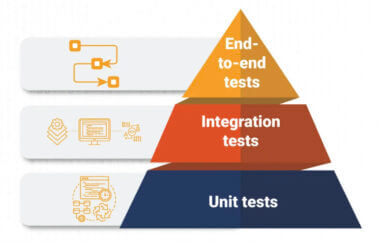

Even though the typical test automation tool is packed with ways to streamline test runs, it often does little to help with better test implementation or with deciding which features to cover. This, in turn, leads most teams to standardize on testing only certain critical functionality. This process results in:

- Ignored functionality that never gets tested

- Features that are only tested post-release

- Legacy tests that cover old functionality that no longer exists

- Test sprawl: tests whose purpose no one remembers, and which consume a lot of time during test runs

All of these lead to bad testing processes, and they can also lead to needlessly failed tests. For example, a legacy test that covers functionality that no longer exists could throw an error because of its interaction with a new feature, and that could cost a whole test suite re-run. We all know how long a test run can take. Unless you have a dedicated test lab (which you really should have), you run your tests at night, so one failure could mean a whole wasted next day.
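As a rough illustration of how the problems listed above could be surfaced, here is a minimal Python sketch. It assumes you keep (or can build) a mapping from each test to the feature it covers; the function name `audit_tests`, the mapping format, and the category labels are all hypothetical and not part of any particular tool.

```python
from typing import Dict, List, Optional, Set


def audit_tests(
    test_to_feature: Dict[str, Optional[str]],
    current_features: Set[str],
) -> Dict[str, List[str]]:
    """Flag untested features, legacy tests, and tests with no known purpose.

    test_to_feature maps a test name to the feature it covers, or to None
    when nobody knows what the test is for (hypothetical format).
    """
    covered = {feature for feature in test_to_feature.values() if feature is not None}
    return {
        # Functionality that exists but is never tested.
        "untested_features": sorted(current_features - covered),
        # Tests that still target functionality that no longer exists.
        "legacy_tests": sorted(
            test
            for test, feature in test_to_feature.items()
            if feature is not None and feature not in current_features
        ),
        # Test sprawl: tests with no recorded purpose.
        "unowned_tests": sorted(
            test for test, feature in test_to_feature.items() if feature is None
        ),
    }
```

Running a check like this before a night run is one possible way to catch a legacy test before it fails the whole suite; how you obtain the test-to-feature mapping depends on your own tooling.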

These testing tools are very useful and are a must for testing not only new features but also user flow and the interaction between features and cases. However, there is another approach that lets you forget about test implementation and focus only on the pages to test.

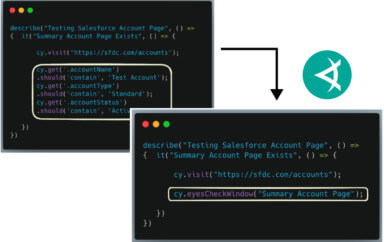

An innovative approach worth considering is to leverage visual testing. Visual testing, which captures screenshots of every page and compares them to a baseline, allows testers to quickly identify issues without having to implement a perfect test for every new piece of functionality. Your Selenium scripts to run a visual test are as simple as loading a page and capturing it, and can be created or updated in seconds.
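To make the "load a page, capture it, compare to a baseline" idea concrete, here is a minimal Python sketch. It is not how Applitools or any specific visual testing product works: the function names, the baseline directory layout, and the byte-for-byte comparison are assumptions made for illustration. Real visual testing tools typically use comparisons that tolerate rendering noise, which an exact hash does not.

```python
import hashlib
from pathlib import Path
from typing import Callable, Dict


def check_against_baseline(name: str, png: bytes, baseline_dir: Path) -> str:
    """Compare one screenshot to its stored baseline.

    Returns "new" when no baseline existed (the capture becomes the baseline),
    "match" when the bytes are identical, and "diff" otherwise.
    """
    baseline_dir.mkdir(parents=True, exist_ok=True)
    baseline = baseline_dir / f"{name}.png"
    if not baseline.exists():
        baseline.write_bytes(png)
        return "new"
    # Naive exact comparison (assumption): any changed byte counts as a diff.
    if hashlib.sha256(baseline.read_bytes()).digest() == hashlib.sha256(png).digest():
        return "match"
    # Keep the differing capture next to the baseline for manual review.
    (baseline_dir / f"{name}.actual.png").write_bytes(png)
    return "diff"


def run_visual_checks(
    pages: Dict[str, str],
    capture: Callable[[str], bytes],
    baseline_dir: Path,
) -> Dict[str, str]:
    """Capture every page (name -> URL) and report its status."""
    return {
        name: check_against_baseline(name, capture(url), baseline_dir)
        for name, url in pages.items()
    }


def selenium_capture(driver) -> Callable[[str], bytes]:
    """Wrap a Selenium WebDriver as a capture function: load the page, screenshot it."""
    def capture(url: str) -> bytes:
        driver.get(url)
        return driver.get_screenshot_as_png()
    return capture
```

With Selenium installed, a call such as `run_visual_checks(pages, selenium_capture(webdriver.Chrome()), Path("baselines"))` would drive a real browser. Adding a page to the visual run is then just one more entry in the `pages` dictionary, which is the sense in which such a script can be updated in seconds.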

When combined with the traditional functional test, this allows you to focus the elaborate tests on user flow and action/response functionality, and leave the new feature and look & feel testing to the visual testing tool. Because most releases contain small changes in app functionality, this means much less time is spent on creating the elaborate tests, which results in another huge benefit: all this freed-up time will allow you to increase the quality of those tests you do create.

Fewer tests, fewer errors, fewer wasted night runs, fewer bugs, faster releases.

ROI for functional testing is simple. But the hidden human error of poorly written and poorly run tests can eat that ROI quickly. Essentially, in a bi-weekly release cycle, if you have 3 or more failed tests, then you have wasted more time than would have been gained by reducing the release cycle to once a month.

Ideally, you would hit the run button just after a release and the tests would magically run. That is not the case, but visual testing gets you much closer. It augments your testing suite with very little effort, reduces human error, and maximizes the mindshare and time of your QA team.

To read more about Applitools’ visual UI testing and Application Visual Management (AVM) solutions, check out the resources section on the Applitools website. To get started with Applitools, request a demo or sign up for a free Applitools account.