Traditional software products render essentially the same on any device. Microsoft Office, for example, looks the same on every personal computer. Imagine taking Microsoft Word exactly as it runs on your desktop and viewing it on an iPhone 4. Either the menus and buttons will appear tiny, or you’ll see only a corner of the screen and need to scroll extensively. Either way, the application becomes essentially unusable.

This frustrating experience is exactly what every designer goes through when they try to design for the web.

The fix for the problem is something called “responsive design”, a technique in which web pages ask the browser what the resolution is, then gracefully rearrange the user experience based on the available screen real estate. Suddenly, it is impossible to know exactly how your software will look in production.
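To make the idea concrete, here is a minimal sketch of the decision a responsive page makes. On a real site this logic usually lives in CSS media queries; the Python below only models it, and the breakpoint widths and layout names are illustrative assumptions, not values from any particular framework.

```python
# Hypothetical breakpoints: (minimum width in CSS pixels, layout name).
# Real sites define these in CSS media queries; the numbers are assumptions.
BREAKPOINTS = [
    (1200, "desktop"),
    (768, "tablet"),
    (0, "phone"),
]


def choose_layout(viewport_width: int) -> str:
    """Return the first layout whose minimum width fits the viewport."""
    if viewport_width < 0:
        raise ValueError("viewport width cannot be negative")
    for min_width, layout in BREAKPOINTS:
        if viewport_width >= min_width:
            return layout
    raise AssertionError("unreachable: the last breakpoint starts at 0")
```

Every boundary in that table is a place where the layout can change, which is why the widths on either side of a breakpoint are worth a look when testing.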

That calls for a test strategy (and an automation strategy) capable of experimenting and learning what “looks right”, and what doesn’t, at various resolutions.

Dynamic Test Strategy

Moving from 320×480 (the resolution of the iPhone 4) to 2048×2048 (a large monitor) leaves millions of possible browser sizes. Most test groups will narrow the list of test devices down to a handful. Even then, the manual testing problem is hard or impossible to approach. Developers cannot possibly anticipate all of the platform problems, and testers can’t catch them all before release. Because of this, we find the occasional user interface issue in production. Maybe someone reduced the size of their browser, causing important text fields to be covered by a page label. Perhaps some code designed to handle dynamic page resizing breaks modal date pickers and never gets noticed by a normal test built on WebDriver. There are too many display options to build tests for, and too little time.
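The arithmetic behind that number is worth a quick check. The sketch below counts whole-pixel width and height combinations. Where you start counting is an assumption, so it shows both every size up to 2048×2048 and only the sizes at least as large as 320×480.

```python
def count_sizes(min_w: int, min_h: int, max_w: int, max_h: int) -> int:
    """Count distinct whole-pixel (width, height) pairs in an inclusive range."""
    return (max_w - min_w + 1) * (max_h - min_h + 1)


# Every size from 1x1 up to the large monitor.
up_to_monitor = count_sizes(1, 1, 2048, 2048)

# Only sizes at least as large as the iPhone 4 resolution quoted above.
phone_to_monitor = count_sizes(320, 480, 2048, 2048)

print(f"{up_to_monitor:,}")     # 4,194,304
print(f"{phone_to_monitor:,}")  # 2,712,801
```

Either way the count is in the millions, far beyond what a hand-picked device list can sample.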

Let’s take a look at a realistic example to illustrate the problem.

Dynamic pages, with things like advertising sliders and user-supplied content streamed in at different sizes, are a staple of many software products. Add to this the fact that we can’t predict how the page will be displayed, and many automation efforts start with failure.

I see two popular solutions for this problem: using a standardized, or baseline, data set that is refreshed every time the test suite runs, and taking things one environment or platform at a time. Standard data ensures page content will look the same every time we load the test environment. Combine that strategy with something like Sauce Labs, which gives people access to many platforms, and you get pretty far.
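As a rough illustration of the baseline idea, the sketch below reloads a known data set before every test. It uses an in-memory SQLite database as a stand-in for the real test environment, and the `tweets` table, the sample rows, and the `load_baseline` helper are all hypothetical names invented for this example.

```python
import sqlite3
import unittest

# Hypothetical baseline rows; a real suite would load a database export instead.
BASELINE_TWEETS = [
    (1, "alice", "First baseline tweet"),
    (2, "bob", "Second baseline tweet"),
]


def load_baseline(conn: sqlite3.Connection) -> None:
    """Drop whatever the last run left behind and reload the known data set."""
    conn.execute("DROP TABLE IF EXISTS tweets")
    conn.execute(
        "CREATE TABLE tweets (id INTEGER PRIMARY KEY, author TEXT, body TEXT)"
    )
    conn.executemany("INSERT INTO tweets VALUES (?, ?, ?)", BASELINE_TWEETS)
    conn.commit()


class FeedTest(unittest.TestCase):
    def setUp(self):
        # An in-memory database stands in for the test environment's data store.
        self.conn = sqlite3.connect(":memory:")
        load_baseline(self.conn)

    def tearDown(self):
        self.conn.close()

    def test_feed_starts_from_known_content(self):
        rows = self.conn.execute("SELECT author FROM tweets ORDER BY id")
        self.assertEqual([row[0] for row in rows], ["alice", "bob"])
```

Because the reload happens in `setUp`, each test sees the same content regardless of what earlier tests changed.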

This approach takes time and resources. You will need time from someone with database access, usually a DBA, to create and update database exports. And someone has to create setup and teardown scripts to maintain the test environment. After all this effort, you might end up with the type of sanitized environment bugs love to hide in.

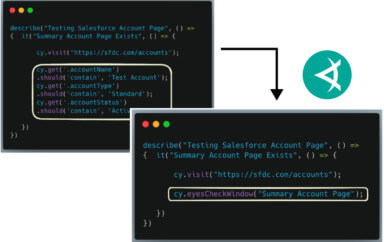

Alternatively, you could use visual testing technology to help automate tests on web pages that vary in layout and content. Using this tooling, you can create tests without any changes to your test environment, and without requiring any skill sets from people outside of your test group.

Let’s look at an example.

Consider the Twitter mobile app.

This product is a combination of continuously changing user content and advertising. There are also a few core parts of the user interface, such as the news feed and notifications, in the header. Using a visual testing tool, you could start by performing a screen capture of the entire scrollable page, not just the viewable area. You could choose a comparison option that ignores text content, but focuses on page elements.

For example, you could see that the fields for tweets exist, that each tweet has a name element and a date/time element, without worrying about what is in the elements.
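The sketch below shows the shape of such a structure-only comparison. It is not the API of any visual testing product: the `Element` record, its fields, and the exact-match rule are assumptions made for illustration, and a real tool would compare rendered images with some tolerance rather than exact pixel boxes.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # x, y, width, height in pixels


@dataclass(frozen=True)
class Element:
    """One captured page element. All fields here are illustrative."""
    kind: str       # e.g. "tweet", "name", "timestamp"
    box: Box
    text: str = ""  # content, deliberately ignored by the comparison


def layout_signature(elements: List[Element]) -> List[Tuple[str, Box]]:
    """Keep element type and position, drop the text content."""
    return sorted((e.kind, e.box) for e in elements)


def same_layout(baseline: List[Element], current: List[Element]) -> bool:
    """True when both captures have the same elements in the same places."""
    return layout_signature(baseline) == layout_signature(current)


baseline = [
    Element("tweet", (0, 100, 320, 80), "Hello world"),
    Element("name", (10, 105, 120, 16), "alice"),
    Element("timestamp", (240, 105, 70, 16), "2h"),
]
# Same structure, completely different content.
todays_feed = [
    Element("tweet", (0, 100, 320, 80), "Breaking news"),
    Element("name", (10, 105, 120, 16), "bob"),
    Element("timestamp", (240, 105, 70, 16), "5m"),
]
# The timestamp element has gone missing.
broken_feed = todays_feed[:2]

print(same_layout(baseline, todays_feed))  # True
print(same_layout(baseline, broken_feed))  # False
```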

Searching for elements across full pages also relieves the maintenance and complexity burden we see in many automated tests. Rather than manipulating data on a page, saving, scrolling and then verifying, you get everything in one shot. This means less code to write, less code to maintain, and fewer false positives in the nightly test runs.

Responsive Testing for Responsive Design

Responsive design has added a combinatorial problem to every available platform. The question is: out of all of these possible platforms and screen sizes, which do we select for the best test coverage?

Reducing the number of environments we cover to only the most popular ones makes the technical task easier, but it ignores the coverage problem.

Increasing the number of environments with an automation framework alone creates a maintenance nightmare and potentially adds an unsolvable testing problem.
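One way to reason about the middle ground between those two extremes is pairwise selection, a general test-design heuristic rather than anything specific to visual testing or prescribed here. The sketch below greedily picks environments until every pair of values across two dimensions has been seen at least once. The browsers, operating systems, and viewport sizes are hypothetical, and a real list would also need to filter out combinations that do not exist.

```python
from itertools import combinations, product

# Hypothetical test dimensions; substitute the platforms your users actually have.
DIMENSIONS = {
    "browser": ["chrome", "firefox", "safari"],
    "os": ["windows", "macos", "android"],
    "viewport": ["320x480", "768x1024", "1920x1080"],
}


def pairs_of(env):
    """All two-dimension value pairs that one environment exercises."""
    return set(combinations(sorted(env.items()), 2))


def greedy_pairwise(dimensions):
    """Return (all environments, a smaller subset covering every value pair)."""
    names = list(dimensions)
    candidates = [dict(zip(names, values)) for values in product(*dimensions.values())]
    uncovered = set()
    for env in candidates:
        uncovered |= pairs_of(env)
    chosen = []
    while uncovered:
        # Pick the environment that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda env: len(pairs_of(env) & uncovered))
        chosen.append(best)
        uncovered -= pairs_of(best)
    return candidates, chosen


candidates, chosen = greedy_pairwise(DIMENSIONS)
print(len(candidates), "possible environments,", len(chosen), "selected")
```

The subset is smaller than the full matrix but still touches every two-way combination, which keeps the maintenance cost bounded without simply testing the most popular devices.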

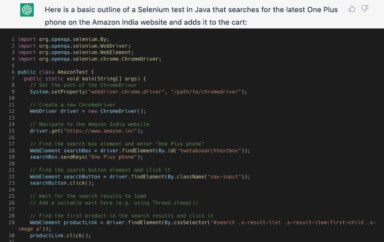

The combination of good visual testing tools with a flexible UI automation framework, such as WebDriver, can make the technical aspects of this problem not just easier to deal with, but solvable.
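On the automation side, that combination might look like the sketch below: drive the browser through a list of window sizes and save a screenshot at each one for a visual comparison tool to inspect. The `capture_at_sizes` helper, the size list, and the file naming are assumptions. The `driver` argument is expected to behave like a Selenium WebDriver, and only its `get`, `set_window_size`, and `save_screenshot` methods are used.

```python
from pathlib import Path
from typing import Iterable, List, Tuple

# Hypothetical sizes to sample; pick the ones that matter for your users.
SIZES = [(320, 480), (768, 1024), (2048, 2048)]


def capture_at_sizes(
    driver, url: str, sizes: Iterable[Tuple[int, int]], out_dir: str
) -> List[Path]:
    """Load `url` once per window size and save a screenshot for each."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    saved = []
    for width, height in sizes:
        # This sets the outer window size; the visible page area is a bit smaller.
        driver.set_window_size(width, height)
        driver.get(url)
        target = out / f"page_{width}x{height}.png"
        driver.save_screenshot(str(target))
        saved.append(target)
    return saved
```

With Selenium installed, passing `webdriver.Chrome()` as the driver would produce one image per size. Deciding whether each image “looks right” is then the visual testing tool’s job, not the script’s.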

The target is good user interface coverage with a reasonable maintenance burden. Visual testing is your only robust and scalable option.

— HAPPY TESTING —


About the author:

Justin Rohrman has been a professional software tester in various capacities since 2005. In his current role, Justin is a consulting software tester and writer working with Excelon Development. Outside of work, he is currently serving on the Association for Software Testing Board of Directors as President, helping to facilitate and develop projects like BBST, WHOSE, and the annual conference CAST.