At an industry event recently I had the opportunity to listen to a keynote by Janet Gregory, co-author of “Agile Testing” and “More Agile Testing”. During the talk, Janet asked the audience to pair up. One person stood facing the stage and the projected slides; the other faced away. The person facing the slides was to tell their partner what they saw. They had five minutes to describe in every detail what was going on in the picture. The person facing away was told to visualize it, and to try to remember every detail spoken to them.

When the clock ticked down and the five minutes were up, Janet asked the folks facing away from the image if they thought they had a good visualization of it. Of course they did; they’d just heard five minutes’ worth of words detailing it.

Then she asked everyone to look at the image.

Eyes widened. Silence. Then, conversation erupted. Laughing out of interest and epiphany scattered its way across the auditorium. “Was this what you envisioned?” Janet asked.

This exercise was powerful. We each knew that our partners had done everything in their power to detail the image to us with words, but on turning around and seeing the image they relayed, we realized that no matter what our partners had said, there was nothing they could have done better — and yet the image was such a far cry from what we’d envisioned! The gap between visual information and other forms is enormous. Visual information is powerful.

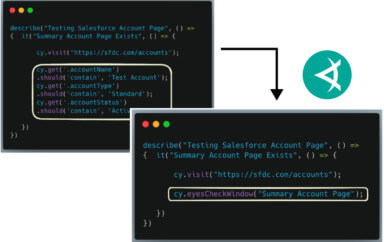

Diffs

While it’s been one of the most productive & efficient practices I use, I can’t tell you the number of hours I’ve invested in looking through diffs trying to discover root causes. And I can’t tell you how many of those hours I’ve reaped in return. A good visual diff tool enables fast, accurate, & timely feedback — a crucial aspect of application testing.

Diffs are powerful.

There are only two things that can cause tests to fail:

- The test code has a defect

- The test code has detected a change

The second item in the list above (detecting a change) can be broken down into these categories:

- The app code changed

- Something in the SUT’s environment changed (including date/time)

- A resource the SUT or test cases interact with changed

As we walk through the 4 ways diffs can help in test automation, notice how these tips identify which of the issues above is the culprit behind a failing test. These 4 ways focus on the most time-intensive process of most test automation efforts: understanding why automated tests fail.

1) Check Myself

I check code I wrote first. Then the team’s test automation code. There are few things worse than being 100% convinced your code is working properly just to have someone else prove you wrong.

I like to use the diffs in changesets provided by source code control as a diagnostic tool. For many test automation engineers these days, this is Git, hosted on a service such as GitHub, GitLab, or Bitbucket. Most of these now have a visual diff tool built in. Long gone are the days of the command line “diff” (although it’s still available if you’re in a pinch). A good diff tool shows what you’ve changed in a visual fashion. With a glance, you may be able to determine whether a change you made affected the outcome of the test.
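For the times a visual tool isn't at hand, the same check can be scripted. The following is a minimal sketch using Python's standard `difflib` module; the file name `test_login.py` and the two versions of the test are hypothetical, made up purely for illustration.

```python
import difflib


def changed_lines(before: str, after: str, name: str = "test_login.py") -> list[str]:
    """Return a unified diff of two versions of a file as a list of lines."""
    return list(
        difflib.unified_diff(
            before.splitlines(),
            after.splitlines(),
            fromfile=f"a/{name}",
            tofile=f"b/{name}",
            lineterm="",  # inputs have no trailing newlines, so emit none
        )
    )


# Two hypothetical versions of the same test: the selector was edited.
OLD = """def test_login(page):
    page.fill("#user", "paul")
    assert page.title() == "Dashboard"
"""
NEW = """def test_login(page):
    page.fill("#username", "paul")
    assert page.title() == "Dashboard"
"""

if __name__ == "__main__":
    print("\n".join(changed_lines(OLD, NEW)))
```

Lines prefixed with `-` and `+` in the output are the ones I changed, and those are the first place to look when a test that used to pass starts failing.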

Check your own changes first. Build credibility in the test suite by ensuring your code works.

2) Check Test Execution History

A clear, visual history of a test case’s executions is essential. Has this test failed often? (I hope you don’t have test cases that fail often. If so, call me.) Is there a pattern to when this test or suite fails? What clues can you gather from the history?

What types of tests failed during the latest run? Is it a functional group? Is the test automation code new? Is the application code new?

Ask questions like these to figure out why there is a difference in test runs.
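If your test runner or CI system can export results, a few of these questions can be answered with a short script. This is only a sketch: the `Run` record and its `build` field are assumptions about what such an export might contain, not the format of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    build: str    # hypothetical build identifier
    passed: bool  # outcome of this test case in that build


def summarize(history: list[Run]) -> dict:
    """Summarize an oldest-to-newest list of runs of a single test case."""
    failures = sum(1 for run in history if not run.passed)
    # A "flip" is any change of outcome between two consecutive runs.
    # Many flips suggest a flaky test rather than one real regression.
    flips = sum(1 for a, b in zip(history, history[1:]) if a.passed != b.passed)
    last_pass = next((run.build for run in reversed(history) if run.passed), None)
    return {
        "runs": len(history),
        "failure_rate": failures / len(history) if history else 0.0,
        "flips": flips,
        "last_passing_build": last_pass,
    }


# Made-up history for illustration only.
HISTORY = [
    Run("101", True),
    Run("102", True),
    Run("103", False),
    Run("104", True),
    Run("105", False),
]

if __name__ == "__main__":
    print(summarize(HISTORY))
```

For the made-up history above, the summary reports a failure rate of 0.4, three flips, and build 104 as the last passing build. The last passing build is the natural starting point for a diff.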

3) Check The Environment

Is the environment causing the unexpected result?

Does the test case pass on your local machine? Change the target environment. Does it pass there?

If you can’t easily change the environment you’re running tests on, it’s likely a major oversight in your architecture. Avoid the “it passes on my machine” issue by finding out whether your machine is the only one where the result differs.

Check whether the failure happens with the same code (test code and application code) on different machines before you reach out for help. If there is a failure in one place, why? Think: “what is different between the two environments?” Here are a few things that could be different in environments:

- Test Code

- Deployed application code

- Deployment/orchestration code

- Configuration

- Other interacting services

- Data

- Date/Time settings

- Changes in Date and Time

- Hardware

- Virtual environments

- Other infrastructure

- Users

- User management

- Security

- Virtual or Mocked services or components

- Other processes using shared resources

When all else fails, one of my favorite debugging techniques is thinking through “If I wanted to create this situation, what would I do?” Is there a way to change your environment to be like the place it failed? (I’m not suggesting changing the environment, just asking the question to learn about the differences between environments and identify what the source of a problem could be.)

Consider time as part of the environment. Did this fail because of when the test ran? What could be happening in the failed environment during the time the test ran?

In orchestrated environments, we can look at config as code and use diffs to determine if the environment may have changed. Use the repository for the configuration code to see if provisioning of environments could have caused issues.
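When the configuration of two environments can be captured as key/value pairs, the comparison itself is a few lines of code. The sketch below assumes you have already collected those settings into dictionaries; the keys and values shown are invented for illustration.

```python
from typing import Optional


def diff_env(
    passing: dict[str, str], failing: dict[str, str]
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Return {key: (passing_value, failing_value)} for every setting that differs.

    A value of None means the key is missing from that environment.
    """
    keys = sorted(set(passing) | set(failing))
    return {
        key: (passing.get(key), failing.get(key))
        for key in keys
        if passing.get(key) != failing.get(key)
    }


# Hypothetical snapshots of two environments.
PASSING = {"APP_VERSION": "2.4.1", "TZ": "UTC", "DB_HOST": "db.internal", "FEATURE_X": "off"}
FAILING = {"APP_VERSION": "2.4.1", "TZ": "America/New_York", "DB_HOST": "db.internal", "MOCK_PAYMENTS": "true"}

if __name__ == "__main__":
    for key, (good, bad) in diff_env(PASSING, FAILING).items():
        print(f"{key}: passing={good!r} failing={bad!r}")
```

Here the differences are a time zone, a feature flag present in only one environment, and a mocked service present only in the other. Each maps onto an item in the list of things that can differ between environments.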

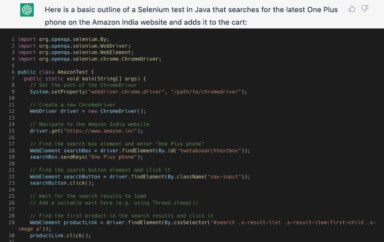

4) The Code Under Test

Finally, did the code under test change? Diffs can help with this too. Your CI environment should be able to tell you which code was deployed immediately prior to your test run. A good CI system and setup will allow you to easily see which changesets from development made it into a given deployment.

Take a look at the diffs from development. Is there anything obvious that could have caused what you’re looking at? If not, at least the changesets since the last time this test case passed can help you identify who was working on code deployed to the system at the time it failed.
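If the application code lives in Git and you know which commit was deployed for the last passing run and which for the failing run, listing the changesets in between is a single `git log` call. The sketch below wraps it in Python; the function names are my own, and it assumes `git` is installed and that both commits exist in the repository at `repo_dir`.

```python
import subprocess


def changeset_command(last_pass: str, failed: str) -> list[str]:
    """Build a git command listing commits reachable from `failed` but not `last_pass`."""
    # %h = abbreviated hash, %an = author name, %s = subject line
    return ["git", "log", "--pretty=format:%h %an %s", f"{last_pass}..{failed}"]


def changesets_between(repo_dir: str, last_pass: str, failed: str) -> list[str]:
    """Run the command in `repo_dir` and return one line per changeset."""
    result = subprocess.run(
        changeset_command(last_pass, failed),
        cwd=repo_dir,
        capture_output=True,
        text=True,
        check=True,  # raise if git reports an error, e.g. an unknown commit
    )
    return result.stdout.splitlines()
```

Each returned line carries the author's name next to the change, which answers the “who was working on this?” question without leaving the terminal.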

To reiterate, this is my process for learning about failed automated tests:

- check test automation code

- check execution history

- check environment

- check code under test

Each of these I do with diff tools. A good visual diff tool can tell you what’s going on quickly, which makes it a powerful application testing tool. Studies show the human brain can process visual imagery in as little as 13 milliseconds. That’s much faster than typing “what changed?” into Slack. It’s considerably faster than weaving through the psychological barricades embedded in a conversation with your teammates.

Speed Matters

Whether you know it or not, your company is now involved in the digital asset race. Your company’s competitors are working to engage customers more deeply and faster. That means they have to deliver software faster. When we can identify differences in expectations of software faster and more accurately than our competitors, we accelerate our digital vehicle.

Good visual diffs are a major accelerator in the digital asset race.


About the Author:

Paul Merrill is principal software engineer in test and founder of Beaufort Fairmont Automated Testing Services. Paul works with customers every day to accelerate testing, reduce risk, and increase the value of testing processes with test automation. An entrepreneur, tester, and software engineer, Paul has a unique perspective on launching and maintaining quality products. Check out his webinars on the company website, and follow him on Twitter: @dpaulmerrill.