Every month I send a check to my health insurance company. That payment covers teeth cleanings, preventative checkups and immunizations, and potentially expensive emergencies. Every month, I hope nothing happens that gives me a reason to cash in on the plan. And for the most part, that is exactly what happens: nothing. Despite the monthly expense, going without insurance is unthinkable.

I want to talk about what you get for a monthly investment in visual testing, and why you won’t want to be without that service.

Regression and Release

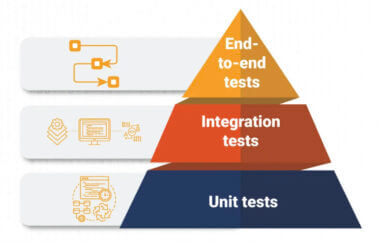

There is one remaining question at the end of every sprint that should be answered before we can push software to production: did we break anything important? Layers of automation (unit tests, API and service layer tests, and tests that drive the user interface) are built alongside the actual product to help answer this question. The automation projects I have worked on began with the same two goals: to help discover regression problems earlier in the release cycle, and to shorten the release cycle.

In addition to finding problems with basic functionality, this style of test automation also helps maintain code quality. If a developer forgets to check for null in their code, or an API no longer accepts a number with 5 digits after the decimal point, an automated test will probably fail. No unit, API, or even classic GUI test will fail, though, if a few text fields are hidden when the browser is resized to simulate the viewable area of a mobile device.

Assume a product with 20 unique web pages, 5 web browsers, and 10 mobile platforms. That leaves 1,000 different page views to check for every release (pages × browsers × platforms = base coverage). Even if checking each one is trivial and your average tester needs only 3 minutes per page (assuming they don’t have to stop because of a bug), testing every page on every browser and device will take 3,000 minutes, or fifty hours. That is just too long. When testing by hand, we usually cover a sample and hope that we tested the right things. If we didn’t, customers report bugs and the development team has to stop working on the next sprint to write a fix.
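The coverage arithmetic above is easy to re-run with your own numbers. A minimal Python sketch using the example figures from the text; the function names are illustrative, not from any tool:

```python
# Base coverage = pages x browsers x platforms, using the example numbers above.
PAGES = 20
BROWSERS = 5
PLATFORMS = 10
MINUTES_PER_PAGE = 3  # optimistic: assumes the tester never stops for a bug


def base_coverage(pages: int, browsers: int, platforms: int) -> int:
    """Number of distinct page views to check each release."""
    return pages * browsers * platforms


def manual_hours(views: int, minutes_per_view: float) -> float:
    """Total hands-on time, in hours, to check every view once."""
    return views * minutes_per_view / 60


views = base_coverage(PAGES, BROWSERS, PLATFORMS)
hours = manual_hours(views, MINUTES_PER_PAGE)
print(f"{views} page views, {views * MINUTES_PER_PAGE} minutes, {hours:g} hours")
```

Because the factors multiply, adding a single browser to this example adds 200 page views, or another 10 hours of manual checking.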

Visual testing strategies don’t eliminate the time needed to cover each of those 20 web pages, 5 browsers, and 10 mobile platforms. But what was normally a haphazard process of discovering visual problems can be done intentionally, with each build, by using visual testing tools. The tester’s role changes a little under this strategy. After each build, someone is responsible for checking a visual report in Jenkins. This report contains a list of the pages, and specific elements on those pages, that have changed from the baseline. The tester’s job is to review these changes, judge whether the differences are important, and update the baseline image when a change is intended.
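The compare, review, and accept cycle can be sketched in a few lines. This is a simplified model, not how any particular tool works: it assumes a screenshot is a grid of grayscale pixel values, and `BaselineStore`, `Difference`, and `diff_images` are hypothetical names. Each comparison reports how many pixels changed and the bounding box of the change, which is roughly what a tester sees highlighted in a visual report.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical simplification: a screenshot is rows of grayscale pixel values.
Image = list[list[int]]
Box = tuple[int, int, int, int]  # (left, top, right, bottom), inclusive


@dataclass
class Difference:
    page: str
    changed_pixels: int
    bbox: Optional[Box]  # None when the image dimensions changed


def diff_images(baseline: Image, current: Image) -> tuple[int, Optional[Box]]:
    """Return (number of changed pixels, bounding box of the changes)."""
    same_shape = len(baseline) == len(current) and all(
        len(a) == len(b) for a, b in zip(baseline, current)
    )
    if not same_shape:
        # A resized page is a visual change in its own right; flag the whole image.
        return max(sum(map(len, baseline)), sum(map(len, current))), None
    changed = [
        (x, y)
        for y, (row_a, row_b) in enumerate(zip(baseline, current))
        for x, (a, b) in enumerate(zip(row_a, row_b))
        if a != b
    ]
    if not changed:
        return 0, None
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return len(changed), (min(xs), min(ys), max(xs), max(ys))


class BaselineStore:
    """Holds the approved screenshot for each page (in memory, for illustration)."""

    def __init__(self) -> None:
        self.baselines: dict[str, Image] = {}

    def compare(self, page: str, current: Image) -> Optional[Difference]:
        if page not in self.baselines:
            # In this sketch, the first capture of a page becomes its baseline.
            self.baselines[page] = current
            return None
        count, bbox = diff_images(self.baselines[page], current)
        return Difference(page, count, bbox) if count else None

    def accept(self, page: str, current: Image) -> None:
        """The tester judged the change intentional: promote it to the baseline."""
        self.baselines[page] = current


# Usage: first run saves a baseline, a later build changes two pixels.
store = BaselineStore()
store.compare("login", [[0, 0, 0], [0, 0, 0]])
new_login = [[0, 0, 0], [0, 255, 255]]
print(store.compare("login", new_login))  # reported: 2 changed pixels
store.accept("login", new_login)          # tester approves the new look
print(store.compare("login", new_login))  # None: matches the updated baseline
```

The key design point is that a human decides what "correct" means: the tool only reports differences, and `accept` is the tester's judgment call recorded as a new baseline.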

Instead of 1,000 manual click-throughs and device switches, the checks that would take 50 hours by hand run in parallel overnight. In the morning, the tester comes in and, over their morning coffee, reviews the 10 screenshots that are significantly different, only three of which turn out to be bugs.
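The overnight run is essentially a parallel loop over every page, browser, and platform combination, followed by a filter so only significant differences reach the morning review. A minimal sketch, assuming a hypothetical `run_visual_check` that would capture one screenshot and compare it to its baseline; here it is faked so the example runs without a browser. The browser names, device names, and 1% threshold are placeholders.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

Target = tuple[str, str, str]  # (page, browser, platform)

PAGES = [f"page-{i}" for i in range(20)]
BROWSERS = ["chrome", "firefox", "safari", "edge", "opera"]  # placeholder list
PLATFORMS = [f"device-{i}" for i in range(10)]               # placeholder devices


def run_visual_check(target: Target) -> tuple[Target, float]:
    """Hypothetical stand-in for 'capture a screenshot and compare to baseline'.

    Returns the fraction of the screenshot that differs from its baseline.
    Faked here: one page in one browser is a pretend regression on every device.
    """
    page, browser, _platform = target
    mismatch = 0.12 if page == "page-7" and browser == "safari" else 0.0
    return target, mismatch


def nightly_run(threshold: float = 0.01, workers: int = 16) -> list[tuple[Target, float]]:
    targets = list(product(PAGES, BROWSERS, PLATFORMS))  # 20 x 5 x 10 = 1,000 checks
    # Threads suit this work: real checks mostly wait on remote browsers.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(run_visual_check, targets))
    # Only significant differences make it into the tester's review queue.
    return [r for r in results if r[1] >= threshold]


review_queue = nightly_run()
print(len(review_queue), "screenshots to review")
```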

An intentional visual testing strategy can produce real time savings. One company found that it was able to discover 20% more user interface bugs before production by developing a visual testing strategy. Another company was able to cover in 5 hours what had taken around 70 hours before it adopted an automated strategy.
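To put the second example in concrete terms, here is a small calculation of the time saved per release cycle. The 70 and 5 hour figures come from the text; the 26 cycles per year (two-week sprints) is a hypothetical assumption for illustration only.

```python
def time_saved(before_hours: float, after_hours: float) -> tuple[float, float]:
    """Return (hours saved per cycle, percentage reduction)."""
    saved = before_hours - after_hours
    return saved, 100 * saved / before_hours


CYCLES_PER_YEAR = 26  # hypothetical: two-week sprints

saved, pct = time_saved(70, 5)
print(f"{saved:g} hours saved per cycle ({pct:.1f}% less)")
print(f"{saved * CYCLES_PER_YEAR:g} hours saved per year at {CYCLES_PER_YEAR} cycles")
```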

Automated Coverage

The average automated user interface test is, at heart, a checklist. I write code that instructs the browser to navigate to a few pages: log in, navigate to search, navigate to edit product. After that, I submit some data to the server and check that the data displayed on the page is what I expected. More complicated tests tend to fail for reasons other than a software bug, and they usually require more complicated code, so UI tests are generally kept very simple. We don’t want to cross too many screens, so we stick to well-worn paths.

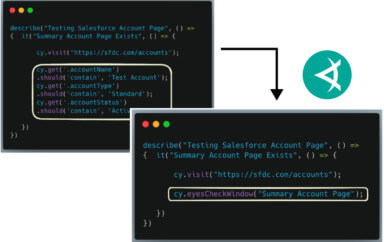

Adding a visual testing strategy can reduce test code and increase coverage at the same time. One of the dirty secrets of UI automation is that we have to stop and ask the page whether it is ready to do some work after every navigation, submit, or AJAX call.

We also have to make assertions, such as ‘does this field contain the value 5.00?’, to learn about the software. All of this adds up to code: to successfully interact with a page, I first need to ask whether an object exists and is visible, and only then check values on the page.
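The "is the page ready yet?" step is usually a polling loop. Selenium users get this from `WebDriverWait`; the sketch below is a standalone, standard-library version of the same pattern so it runs without a browser. `wait_until` and `FakeField` are hypothetical names, and the fake field simply becomes visible on its third poll.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, interval: float = 0.25) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class FakeField:
    """Hypothetical page element that becomes visible on the third poll."""

    def __init__(self) -> None:
        self.polls = 0
        self.text = "5.00"

    def is_displayed(self) -> bool:
        self.polls += 1
        return self.polls >= 3


field = FakeField()
wait_until(field.is_displayed, timeout=2, interval=0.01)  # first: is it there and visible?
assert field.text == "5.00"                               # only then: check the value
```

Every field a test touches needs this pair of steps, which is where much of the test code in a typical UI suite comes from.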

Visual testing tools can reduce some of our dependency on polling for objects and making assertions on a page. After navigating to a page, a visual testing library can compare the layout and content of the current view against the baseline image. This can be done for every page and pop-up an automated test encounters. In the end, we have more powerful tests and less test code.
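To show why one visual check can stand in for many field-level assertions, here is a simplified model. Real tools compare rendered images; this sketch instead assumes a hypothetical "snapshot" mapping each element id to its on-screen box and text, and `Element` and `visual_check` are illustrative names. A single comparison after navigation reports every moved, resized, missing, or re-worded element, including ones no assertion was written for.

```python
from typing import NamedTuple


class Element(NamedTuple):
    x: int
    y: int
    width: int
    height: int
    text: str


Snapshot = dict[str, Element]  # hypothetical: element id -> rendered box and text


def visual_check(baseline: Snapshot, current: Snapshot) -> list[str]:
    """Describe every layout or content change between two page snapshots."""
    problems = []
    for element_id in sorted(baseline.keys() | current.keys()):
        old, new = baseline.get(element_id), current.get(element_id)
        if old is None:
            problems.append(f"{element_id}: new element")
        elif new is None:
            problems.append(f"{element_id}: missing")
        elif old[:4] != new[:4]:  # compare (x, y, width, height)
            problems.append(f"{element_id}: moved or resized {old[:4]} -> {new[:4]}")
        elif old.text != new.text:
            problems.append(f"{element_id}: text {old.text!r} -> {new.text!r}")
    return problems


baseline = {
    "name": Element(10, 10, 200, 20, "Widget"),
    "price": Element(10, 40, 80, 20, "5.00"),
    "zip": Element(10, 70, 80, 20, ""),
}
# After a build: the price lost a digit and the zip field no longer renders.
current = {
    "name": Element(10, 10, 200, 20, "Widget"),
    "price": Element(10, 40, 80, 20, "5.0"),
}
problems = visual_check(baseline, current)
print(problems)
```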

This level of visual testing was not possible a few years ago because of how image comparisons were performed: comparing screenshots strictly, pixel by pixel, flags even tiny rendering differences as failures. Modern visual testing tools provide a variety of comparison and review options that make tests more stable and less prone to false positives.
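Commercial tools use their own, more sophisticated comparison algorithms. This sketch only illustrates two simple, widely used ideas for cutting false positives: a per-channel color tolerance that absorbs small rendering drift such as anti-aliasing, and ignore regions that mask dynamic content such as a clock. The function and parameter names are illustrative.

```python
from typing import Sequence

Pixel = tuple[int, int, int]        # RGB
Image = list[list[Pixel]]
Region = tuple[int, int, int, int]  # (left, top, right, bottom), inclusive


def images_match(baseline: Image, current: Image, tolerance: int = 0,
                 ignore: Sequence[Region] = (), max_diff_ratio: float = 0.0) -> bool:
    """Compare two screenshots, skipping ignored regions and small color drift."""
    if len(baseline) != len(current) or any(len(a) != len(b) for a, b in zip(baseline, current)):
        return False  # a size change is always reported
    checked = differing = 0
    for y, (row_a, row_b) in enumerate(zip(baseline, current)):
        for x, (pa, pb) in enumerate(zip(row_a, row_b)):
            if any(l <= x <= r and t <= y <= b for l, t, r, b in ignore):
                continue  # masked area, e.g. a timestamp or rotating ad
            checked += 1
            if max(abs(ca - cb) for ca, cb in zip(pa, pb)) > tolerance:
                differing += 1
    return checked == 0 or differing / checked <= max_diff_ratio


white, near_white, red = (255, 255, 255), (250, 252, 255), (255, 0, 0)
baseline = [[white, white, white], [white, white, white]]
antialiased = [[white, near_white, white], [white, white, white]]
timestamp_changed = [[white, white, red], [white, white, white]]

strict = images_match(baseline, antialiased)                                # fails on tiny drift
tolerant = images_match(baseline, antialiased, tolerance=8)                 # drift absorbed
masked = images_match(baseline, timestamp_changed, ignore=[(2, 0, 2, 0)])   # region skipped
```

A real bug, like the red pixel outside any ignore region, still fails under the tolerant settings; the goal is to stop flagging noise, not to stop flagging change.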

Ideally, you end up with more stable tests, simpler test code, and purposeful coverage of the visual parts of your software. We also gain the ability to discover problems outside the narrow assertions most tests are built with.

Return on Investment

The ROI of visual testing can be measured in the ways it extends our ability to test software: noticing problems, increasing coverage, and making test automation work more efficiently. People develop a sort of blindness after looking at the same thing for long periods of time. Visual testing keeps noticing changes that a person, or conventional test code, would miss, no matter how long it has been running.

To read more about Applitools’ visual UI testing and Application Visual Management (AVM) solutions, check out the resources section on the Applitools website. To get started with Applitools, request a demo or sign up for a free Applitools account.

Post written by Justin Rohrman:

Justin has been a professional software tester in various capacities since 2005. In his current role, Justin is a consulting software tester and writer working with Excelon Development. Outside of work, he is currently serving on the Association for Software Testing Board of Directors as President, helping to facilitate and develop projects like BBST, WHOSE, and the annual conference CAST.