In February 2020, we were fortunate enough to have Erica Stanley present at the Applitools Days in Atlanta, GA. She spoke about virtual reality, augmented reality, and mixed reality and gave some examples of next-generation mixed reality.

When you create new technology, you think “fail fast.” So why should an R&D team prioritize quality?

Erica is a senior engineering manager on the Emerging Tech, Mixed Reality team at Mozilla. Within the Mixed Reality (MR) group, her team focuses on MR for desktop and Android and has found a creative way to implement test automation with the help of Applitools, Cypress, OpenCV, and FFMPEG.

Writing this in the midst of the pandemic, I am struck by how much has changed since February. Watching her presentation, I could not help but think of the potential such technology might have in a world of social distancing. Imagine being able to shop from home while interacting with the product. Or imagine attending a virtual reality meeting in a shared room with your colleagues. These are just a few of the examples Erica talks about in her presentation.

Erica focuses on quality engineering within a team built around innovation. If you think that quality engineering and innovation cannot coexist, Erica explains why they must work together, as well as how she helps her team use quality tools to aid their innovation process.

Three Realities

Let’s begin with an overview of terminology:

- Virtual Reality (VR) – A fully immersive experience where a user leaves the real-world environment behind and enters an entirely digital one through a VR headset.

- Augmented Reality (AR) – An experience where virtual objects are superimposed onto the real-world environment via smartphones, tablets, heads-up displays, or AR glasses. (Think of the Pokémon GO game or the IKEA furniture app.)

- Mixed Reality (MR) – A step beyond augmented reality, where users can interact with virtual objects placed in the real world, and those objects respond as if they were real.

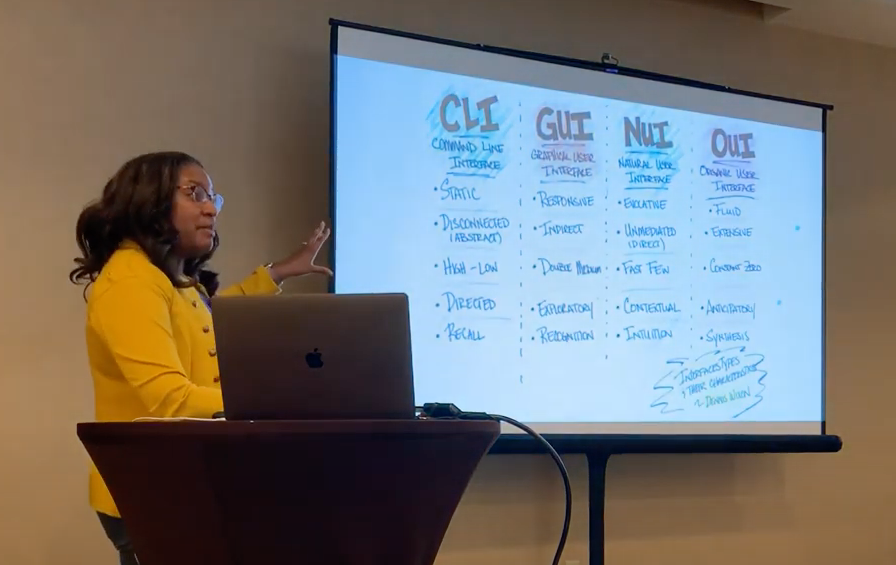

Four Interfaces

Next, Erica described four kinds of user interfaces. Since virtual reality extends human-machine interaction, let’s start with the earliest and move up.

- CLI – Command Line Interface – not necessarily what we think of as virtual reality, but still at the core of every computing system.

- GUI – Graphical User Interface – graphics-based and interactive, with visual feedback. Whether we use desktop monitors or virtual reality glasses, we still rely on this interface today.

- NUI – Natural User Interface – you don’t need instructions; it behaves the way you expect. Erica gave the example of painting: as a painter, she finds that digital painting on the iPad (brush strokes, paint color mixing, and so on) feels natural.

- OUI – Organic User Interface – the interface blends almost seamlessly with your reality; the environment learns from you and changes with you.

Examples of Next Generation Mixed Reality

Erica gave some interesting examples of potential real-world applications of mixed reality. They span entertainment, collaboration, and social engagement, areas where MR might provide unique value:

- Immersive Movies – Imagine walking around in a 3D environment and interacting with the movie. Would this mean being in a movie theater or being in the movie itself? Which movie would you “drop” into? Or would it be more like a virtual Black Mirror: Bandersnatch, where you interact with the movie to determine the outcome?

- Corporate Meetings – This would take working from home to the next level, with everyone “together” in a meeting room. Nothing beats meeting in person, but sharing a virtual whiteboard in the same virtual room could bring a new level of focus to remote meetings. I am looking forward to this application of MR.

- Civic Engagement – How about envisioning planning commission proposals during a real-world walk-around of your neighborhood? Or sharing visualizations of education data during a school board meeting?

A Focus on Quality: Yes – Even for Research Product Delivery

Some people think of quality as a cost of doing business: a way to reduce support and rework costs. If you share that mindset, you probably wonder how quality engineering fits into an R&D lab.

As Erica explains, her team works with partners who are also their customers. The partners expect working products, just as any customer would. Her team also works with user communities who, in turn, build on top of her team’s code. Each iteration includes new features, enhancements, and bug fixes. Because of all these downstream dependencies, any bug, misbehavior, or unexpected behavior change can cause huge issues for those downstream teams. To remain a trusted supplier and partner in this R&D world, Erica helped her team prioritize quality and deliver it as a core component of the build process.

Quality Challenges in Mixed Reality Applications

Erica and her team knew that their ecosystem depended on quality. The question remained: how could the team deliver a quality product? What tools could help them deploy and test a cutting-edge technology? Erica realized that she and her team needed to innovate on the testing solution itself. At the same time, she needed to import best practices from across the broader software development world.

So, what are some of the challenges that Erica’s team faced in testing mixed reality applications?

- Lack of specialized software for virtual reality testing. Testers must build their own test approaches and test fixtures. So few people are building AR/VR/MR applications, let alone running automated tests on them, that teams need to figure it out on their own.

- Teams must design their tests to account for both 2D and 3D interactions.

- Teams face an incredibly fragmented device market. Headsets, eyewear, and tactile interfaces each have their own specifications and behavior. As in the early days of Android smartphones, every vendor complies with the existing specification and then extends beyond it.

Despite all these technical challenges, the biggest hurdle Erica and her team faced was not technical. Her focus on quality ran headlong into the existing culture of ‘fail fast’. How did she convince research engineers to build in automated testing? It came down to showing them that quality is a way to deepen and speed up the learning from the research itself.

Erica went on to discuss how she fostered a learning culture that includes psychological safety, the implementation of standards, and deep collaboration between developers and the QA team.

Running Test Automation on Mixed Reality

The team at Mozilla built an entire test automation suite for their code builds. A large component of that automation is visual testing, delivered via Applitools. Benefits they gained from visually testing AR, VR, and MR applications include:

- Reducing manual testing in the headset. Getting a user into the headset takes time. With visual testing, you can test in the browser instead.

- Visualization fosters collaboration between developers and the QA team. The team agrees on what is correct in the baseline images and discusses changes together, a great way to speed up research learnings.

- Addressing device fragmentation – you can turn certain snapshots on or off depending on the device under test, which lets you cover all the devices concisely.
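To make the device-fragmentation point more concrete, here is a minimal Python sketch of per-device snapshot toggles. The talk does not show Mozilla’s configuration, so the `Checkpoint` class, the `checkpoints_for` helper, and every device and checkpoint name below are hypothetical; a real suite would feed the resulting list into whatever visual-testing calls it uses.

```python
# Hypothetical sketch of per-device snapshot toggles; device and checkpoint
# names are illustrative, not taken from Mozilla's test suite.
from dataclasses import dataclass


@dataclass(frozen=True)
class Checkpoint:
    name: str
    # Devices on which this snapshot is skipped, e.g. because the feature
    # is unsupported there or renders in a device-specific way.
    skip_on: frozenset = frozenset()


CHECKPOINTS = [
    Checkpoint("home-screen"),
    Checkpoint("url-bar-focused"),
    Checkpoint("hand-controller-menu", skip_on=frozenset({"desktop"})),
    Checkpoint("keyboard-overlay", skip_on=frozenset({"headset-a"})),
]


def checkpoints_for(device: str) -> list:
    """Return, in declaration order, the snapshot names to take on `device`."""
    return [cp.name for cp in CHECKPOINTS if device not in cp.skip_on]


if __name__ == "__main__":
    for device in ("desktop", "headset-a"):
        print(device, checkpoints_for(device))
    # desktop ['home-screen', 'url-bar-focused', 'keyboard-overlay']
    # headset-a ['home-screen', 'url-bar-focused', 'hand-controller-menu']
```

Keeping each skip rule next to its checkpoint, instead of scattering device checks through the test code, is one way to keep a growing device matrix readable; an allow-list per checkpoint would work equally well for snapshots that only apply to one device.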

While the different scenarios require different tools, the one constant was Applitools. Below are the three scenarios that the team at Mozilla has implemented:

- 2D with Applitools & Cypress – to test navigation, essentially automating tests of the browser’s chrome (the interface around the web content).

- 3D on Android with Applitools & OpenCV – This combination helps the team understand where objects are in space. If one object contains another, you can isolate the object under test and focus solely on it.

- 360 Video with Applitools & FFMPEG – This lets the team isolate individual frames of a 360 video and validate them visually.
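The OpenCV and FFMPEG scenarios both come down to narrowing a capture to the part under test before validating it. The Python sketch below illustrates that idea under stated assumptions; it is not Mozilla’s code. `bounding_box` and `crop` are hypothetical stand-ins for what OpenCV would do on a thresholded image (for example with `cv2.boundingRect` and NumPy slicing), written in plain Python so they run without dependencies. `ffmpeg_frame_cmd` and `extract_frame` are likewise hypothetical helpers that build and run a standard `ffmpeg` invocation (`-ss` to seek, `-frames:v 1` to write a single frame); the resulting image would then go to a visual check.

```python
# Hypothetical preprocessing helpers: a sketch, not Mozilla's implementation.
import subprocess
from typing import Optional, Tuple


def bounding_box(mask: list) -> Optional[Tuple[int, int, int, int]]:
    """Return (x, y, width, height) enclosing the non-zero pixels of a mask.

    Plain-Python stand-in for cv2.boundingRect on a thresholded image.
    Returns None when the mask contains no object pixels.
    """
    if not mask or not mask[0]:
        return None
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    if not rows:
        return None
    x, y = cols[0], rows[0]
    return x, y, cols[-1] - x + 1, rows[-1] - y + 1


def crop(image: list, box: Tuple[int, int, int, int]) -> list:
    """Keep only the region (x, y, width, height) of a row-major image."""
    x, y, w, h = box
    return [row[x:x + w] for row in image[y:y + h]]


def ffmpeg_frame_cmd(video: str, timestamp: str, out_png: str) -> list:
    """Build an ffmpeg command that writes the single frame at `timestamp`.

    -ss before -i seeks in the input; -frames:v 1 stops after one frame;
    -y overwrites out_png if it already exists.
    """
    return ["ffmpeg", "-y", "-ss", timestamp, "-i", video,
            "-frames:v", "1", out_png]


def extract_frame(video: str, timestamp: str, out_png: str) -> None:
    """Run ffmpeg; raises subprocess.CalledProcessError on a non-zero exit."""
    subprocess.run(ffmpeg_frame_cmd(video, timestamp, out_png), check=True)


if __name__ == "__main__":
    mask = [[0, 0, 0, 0],
            [0, 1, 1, 0],
            [0, 0, 1, 0],
            [0, 0, 0, 0]]
    box = bounding_box(mask)
    print(box)              # (1, 1, 2, 2)
    print(crop(mask, box))  # [[1, 1], [0, 1]]
```

For example, `ffmpeg_frame_cmd("tour-360.mp4", "00:00:05", "frame.png")` yields the arguments for `ffmpeg -y -ss 00:00:05 -i tour-360.mp4 -frames:v 1 frame.png`. Building the argument list separately from running it keeps the command easy to inspect and test without ffmpeg installed.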

What’s Next

Wrapping up, Erica spoke about interactions that go beyond the visual and how they might be tested. One of the challenges up next: how do you automate testing of a controller that is supposed to vibrate during an interaction, or test voice interactions?

AR, VR, and MR comprise a set of fast-evolving user experience technologies. Erica and her team are among the leading group of quality engineers taking on the challenges that these technologies present.

You can follow Erica on Twitter @ericastanley.


This blog post was originally published on JAXenter on July 1, 2020.