Autonomous testing, where automated tools figure out what to test for us, is going to be the next great wave in software quality. In this article, we’ll dive deeply into what autonomous testing is, how it will work, and what our workflows as testers will look like with autonomous tools. Like self-driving cars, truly autonomous testing tools aren’t available yet, but they’re coming soon. Let’s get ready for them!

What is autonomous testing?

So, what exactly is autonomous testing? It’s the next evolutionary step in testing efficiency, after manual and automated testing.

A brief step back in time

Let’s take a step back in time. Historically, all testing was done manually. Humans needed to poke and prod software products themselves to check if they worked properly. Teams of testers would fill repositories with test case procedures and then grind through them en masse during release cycles. Testing moved at the speed of humans: it got done when it got done.

Then, as an industry, we began to automate our tests. Many of the tests we ran were rote and repetitive, so we decided to make machines run them for us. We scripted our tests in languages like Java, JavaScript, and Python. We developed a plethora of tools to interact with products, like Selenium for web browsers and Postman for APIs. Eventually, we executed tests as part of Continuous Integration systems so that we could get fast feedback perpetually.

With automation, things were great… mostly. We still needed to take time to develop the tests, but we could run them whenever we wanted. We could also run them in parallel to speed up turnaround time. Suites that took days to complete manually could be completed in hours, if not minutes, with automation.

Unfortunately, test automation is hard. Test automation is full-blown software development. Testers needed to become developers to do it right. Flakiness became a huge pain point. Sometimes, tests missed obvious problems that humans would easily catch, like missing buttons or poor styling. Many teams tried to automate their tests and simply failed because the bar was too high.

What we want is the speed and helpfulness of automation without the challenges in developing it. It would be great if a tool could look at an app, figure out its behaviors, and just go test it. That’s essentially what autonomous testing will do.

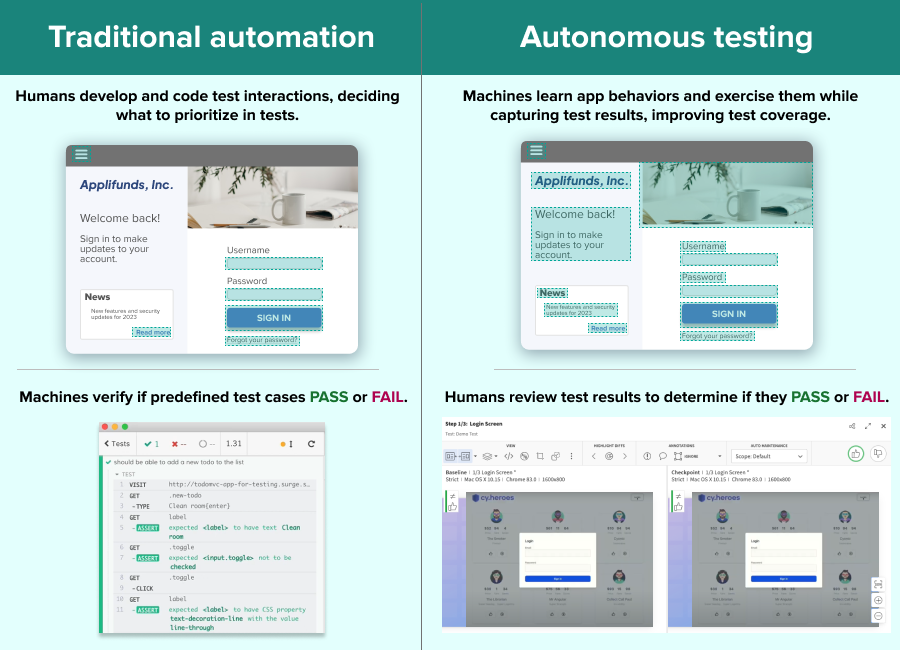

Ironically, traditional test automation isn’t fully automated. It still needs human testers to write the steps. Autonomous testing tools will truly be automated because the tool will figure out the test steps for us.

The car analogy

Cars are a great analogy for understanding the differences between manual, automated, and autonomous testing.

Manual testing is like a car with a manual transmission. As the driver, you need to mash the clutch and shift through gears to make the car move. You essentially become one with the vehicle.

Many classic cars, like the vintage Volkswagen Beetle, relied on manual transmissions. Beetle gear shifters were four-on-the-floor with a push-down lockout for reverse.

Automated testing is like a car with an automatic transmission. The car still needs to shift gears, but the transmission does it automatically for you based on how fast the car is going. As the driver, you still need to pay attention to the road, but driving is easier because you have one less concern. You could even put the car on cruise control!

Autonomous testing is like a self-driving car. Now, all you need to do is plug in the destination and let the car take you there. It’s a big step forward, and it enables you, now as a passenger, to focus on other things. As with self-driving cars, we haven’t quite achieved fully autonomous testing yet. It’s still a technology we hope to build in the very near future.

How will it work?

This probably goes without saying, but autonomous testing tools will need to leverage artificial intelligence and machine learning in order to learn an app’s context well enough to test it. For example, if we want to test a mobile app, then at the bare minimum, a tool needs to learn how phones work. It needs to know how to recognize different kinds of elements on a screen. It needs to know that buttons require tapping while input fields require text. Those kinds of things are universal for all mobile apps. At a higher level, it needs to figure out workflows for the app, the way humans would use it. Certain things like search bars and shopping carts may be the same in different apps, but domain-specific workflows will be unique. Each business has its own special sauce.

This means that autonomous testing tools won’t be ready to use “out of the box.” Their learning models will come with general training on how apps typically work, but then they’ll need to do more training and learning on the apps they are targeted to test. For example, if I want my tool to test the Uber app, then the tool should already know how to check fields and navigate maps, but it will need to spend time learning how ridesharing works. Autonomous tools will, in a sense, need to learn how to learn. And there are three ways this kind of learning could happen.

Random trial and error

The first way is random trial and error. This is machine learning’s brute force approach. The tool could literally just hammer the app, trying to find all possible paths – whether or not they make sense. This approach would take the most time and yield the poorest quality of results, but it could get the job done. It’s like a Roomba, bonking around your bedroom until it vacuums the whole carpet.
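To make the brute-force idea concrete, here is a minimal sketch of random exploration. It is not how any real tool works: the app is a hypothetical toy model (`TOY_APP`, a dictionary of screens and actions invented for this example), and `explore_randomly` is just an illustrative name. A real tool would have to drive an actual UI instead of reading a dictionary.

```python
import random

# Hypothetical toy app: each screen maps the actions available on it
# to the screen that action leads to. This model is an assumption made
# for illustration; a real tool would discover it by driving the UI.
TOY_APP = {
    "home": {"tap_search": "search", "tap_cart": "cart"},
    "search": {"type_query": "results", "tap_back": "home"},
    "results": {"tap_item": "item", "tap_back": "search"},
    "item": {"tap_add_to_cart": "cart", "tap_back": "results"},
    "cart": {"tap_checkout": "checkout", "tap_back": "home"},
    "checkout": {"tap_back": "cart"},
}


def explore_randomly(app, start, max_steps, seed=None):
    """Random walk over the app, recording every transition it stumbles on."""
    rng = random.Random(seed)
    seen = set()
    screen = start
    for _ in range(max_steps):
        # Pick any available action, whether or not it "makes sense".
        action = rng.choice(sorted(app[screen]))
        next_screen = app[screen][action]
        seen.add((screen, action, next_screen))
        screen = next_screen
    return seen


def total_transitions(app):
    """Count every (screen, action) pair that exists in the model."""
    return sum(len(actions) for actions in app.values())


if __name__ == "__main__":
    for steps in (5, 20, 200):
        found = explore_randomly(TOY_APP, "home", steps, seed=42)
        print(f"{steps} steps: found {len(found)} of {total_transitions(TOY_APP)} transitions")
```

Even on a six-screen toy, a random walk keeps revisiting transitions it has already seen, which hints at why this approach would be the slowest of the three.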

Coaching from a user

The second way for the tool to learn an app is coaching from a user. Instead of attempting to be completely self-reliant, a tool could watch a user do a few basic workflows. Then, it could use what it learned to extend those workflows and find new behaviors. Another way this could work would be for the tool to provide a recommendation system. The tool could try to find behaviors worth testing, suggest those behaviors to the human tester, and the human tester could accept or reject those suggestions. The tool could then learn from that feedback: accepted tests signal good directions, while rejected tests could be avoided in the future.

Essentially, the tester and the tool together would become a centaur: humans and AI working together and learning from each other. The human lends expertise to the AI to guide its learning, while the AI provides suggestions that go deeper than the human can see at a glance. Both become stronger through symbiosis.
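The accept/reject feedback loop could be sketched roughly like this. Everything here is an assumption for illustration: the `SuggestionRanker` class, the idea of tagging each suggested test with the screens it touches, and the simple plus-one/minus-one weighting. A real tool would presumably use a far richer learning model.

```python
class SuggestionRanker:
    """Ranks candidate tests using accept/reject feedback from a human tester."""

    def __init__(self):
        # Weight per tag. Tags are hypothetical labels, such as screen names.
        self.weights = {}

    def record_feedback(self, tags, accepted):
        """Nudge a suggestion's tags up if it was accepted, down if rejected."""
        delta = 1.0 if accepted else -1.0
        for tag in tags:
            self.weights[tag] = self.weights.get(tag, 0.0) + delta

    def score(self, tags):
        """Sum the learned weights for a candidate's tags (unknown tags count as 0)."""
        return sum(self.weights.get(tag, 0.0) for tag in tags)

    def rank(self, candidates):
        """Return candidate names, best first. `candidates` maps name -> set of tags."""
        return sorted(candidates, key=lambda name: self.score(candidates[name]), reverse=True)


if __name__ == "__main__":
    ranker = SuggestionRanker()
    # The tester accepted a checkout test and rejected a theme-settings test.
    ranker.record_feedback({"cart", "checkout"}, accepted=True)
    ranker.record_feedback({"settings", "theme"}, accepted=False)

    candidates = {
        "change theme twice": {"settings", "theme"},
        "checkout with empty cart": {"cart", "checkout"},
        "search then open settings": {"search", "settings"},
    }
    for name in ranker.rank(candidates):
        print(name)
```

After that feedback, suggestions near the accepted checkout flow rise to the top, while ones resembling the rejected settings test sink.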

Learning from observability data

A third way for an autonomous testing tool to learn app context acts like a centaur on steroids: learning from observability data. Observability refers to all the data gathered about an app’s real-time operations. It includes aspects like logging, system performance, and events. Essentially, if done right, observability can capture all the behaviors that users exercise in the app. An autonomous testing tool could learn all those behaviors very quickly by mining that data, probably much faster than watching users one at a time.
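As a rough illustration, here is one way a tool might pull candidate workflows out of event data. The record shape (`session`, `ts`, `event`) and the sample `EVENTS` are invented for this sketch. Real observability data would be much larger and messier, and would likely need more than simple counting.

```python
from collections import Counter, defaultdict

# Hypothetical event records, deliberately out of order.
# The field names are assumptions made for this example.
EVENTS = [
    {"session": "b", "ts": 3, "event": "add_to_cart"},
    {"session": "a", "ts": 1, "event": "open_home"},
    {"session": "a", "ts": 2, "event": "search"},
    {"session": "c", "ts": 5, "event": "open_home"},
    {"session": "b", "ts": 1, "event": "open_home"},
    {"session": "a", "ts": 3, "event": "add_to_cart"},
    {"session": "b", "ts": 2, "event": "search"},
    {"session": "c", "ts": 6, "event": "view_orders"},
    {"session": "a", "ts": 4, "event": "checkout"},
    {"session": "b", "ts": 4, "event": "checkout"},
]


def workflows_from_events(events):
    """Group events by session, order them by time, and count identical paths."""
    sessions = defaultdict(list)
    for record in sorted(events, key=lambda r: (r["session"], r["ts"])):
        sessions[record["session"]].append(record["event"])
    return Counter(tuple(path) for path in sessions.values())


if __name__ == "__main__":
    # The most frequent paths are natural candidates for the first tests.
    for path, count in workflows_from_events(EVENTS).most_common():
        print(count, " > ".join(path))
```

Counting whole-session paths is the simplest possible choice. It already surfaces the search-to-checkout flow as the most common behavior in this sample, without anyone having to demonstrate it.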

What will a workflow look like?

So, let’s say we have an autonomous testing tool that has successfully learned our app. How do we, as developers and testers, use this tool as part of our jobs? What would our day-to-day workflows look like? How would things be different? Here’s what I predict.

Setting baseline behaviors

When a team adopts an autonomous testing tool for their app, the tool will first go through that learning phase. It will spend some time playing with the app and learning from users to figure out how it works. Then, it can report the behaviors it found to the team as suggestions for testing, and the team can pick which of those tests to keep and which to skip. That set then becomes a rough “baseline” for test coverage. The tool will then set itself to run those tests as appropriate, such as in a CI pipeline. If it knows what steps to take to exercise the target behaviors, then it can put together scripts for those interactions. Under the hood, it could use tools like Selenium or Appium.

Detecting changes in behaviors

Meanwhile, developers keep on developing. Whenever developers make a code change, the tool can do a few things. First, it can run the automated tests it has. Second, it can go exploring for new behaviors. If it finds any differences, it can report them to the humans, who can decide if they are good or bad. For example, if a developer intentionally added a new page, then the change is probably good, and the team would want the testing tool to figure out its behaviors and add them to the suite. However, if one of the new behaviors yields an ugly error, then that change is probably bad, and the team could flag that as a failure. The tool could then automatically add a test checking against that error, and the developers could start working on a fix.
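The comparison step could be sketched like this. It assumes, purely for illustration, that behaviors are stored as a mapping from an interaction (such as `"home > tap_cart"`) to the outcome the tool observed. The `diff_behaviors` function and the sample data are hypothetical, not part of any real tool.

```python
# Hypothetical behavior maps: interaction -> observed outcome.
BASELINE = {
    "home > tap_cart": "cart page",
    "cart > tap_checkout": "checkout page",
}

CURRENT = {
    "home > tap_cart": "cart page",
    "cart > tap_checkout": "HTTP 500 error page",
    "home > tap_profile": "profile page",
}


def diff_behaviors(baseline, current):
    """Compare two behavior maps and bucket the differences for human review."""
    shared = set(baseline) & set(current)
    return {
        "new": sorted(set(current) - set(baseline)),
        "missing": sorted(set(baseline) - set(current)),
        "changed": sorted(key for key in shared if baseline[key] != current[key]),
    }


if __name__ == "__main__":
    report = diff_behaviors(BASELINE, CURRENT)
    for bucket, interactions in report.items():
        for interaction in interactions:
            print(f"{bucket}: {interaction} -> {CURRENT.get(interaction, 'not found')}")
```

Note that the sketch only detects differences. Deciding that the new profile page is good and the error page is bad remains the humans’ call.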

Defining new roles

In this sense, the autonomous testing tool automates the test automation. It fundamentally changes how we think of test automation. With traditional automation, humans own the responsibility of figuring out interactions, while machines own the responsibility of making verifications. Humans need to develop the tests and code them. The machines then grind out PASS or FAIL. With autonomous testing, those roles switch. The machines figure out interactions by learning app behaviors and exercising them, while humans review those results to determine if they were desirable (PASS) or undesirable (FAIL). Automation is no longer a collection of rote procedures but a sophisticated change detection tool.

What can we do today?

Although full-blown autonomous testing is not yet possible, we can achieve semi-autonomous testing today with readily available testing tools and a little bit of ingenuity. In the next article, we’ll learn how to build a test project using Playwright and Applitools Eyes that autonomously performs visual and some functional assertions on every page in a website’s sitemap file using Visual AI.

Applitools is working on a fully autonomous testing solution that will be available soon. Reach out to our team to see a demo and learn more!