How much is it worth to catch more bugs early in your product release process? Depending on where you are in that process, you might be writing unit or system tests. But you need to run end-to-end tests to prove behavior, and writing end-to-end tests well demands a high degree of skill from quality engineers.

What would you say if a single validation engine could help you ensure data integrity, functional integrity, and graphical integrity in your web and mobile applications? And, as a result, catch more bugs earlier in your release process?

Catch Bugs or Die

Let’s start with the dirty truth: all software has bugs. Your desire to create bug-free code conflicts with the reality that you often lack the tools to uncover all the bugs until someone finds them way late in the product delivery process. Like, say, the customer.

With all the potential failure modes you design for – and then test against – you begin to realize that not all failure modes are created equal. You might even have your own triage list:

- Security & penetration

- Data integrity and consistency

- Functional integrity and consistency

So, where do graphical integrity and consistency fit on your list? For many of your peers, graphical integrity might not even show up on the list. They might dismiss it as a matter of cosmetic issues. Not a big deal.

Lots of us don’t have reliable tools to validate graphical integrity. We rely on our initial unit tests, system tests, and end-to-end tests to uncover graphical issues, and we assume those issues stay solved once they’re caught. Realistically, though, every change to an evolving application can introduce bugs, including graphical bugs. But who has an automation system that does visual validation with a high degree of accuracy?

Tradeoffs In End-to-End Testing

Your web and mobile apps behave at several levels. The level that matters to your users, though, is the user interface in the browser or on the device. Your server code, database code, and UI code turn into a representation of visual elements, with some kind of visual cursor (or keyboard equivalent) that moves across a plane to settle on different elements. The end-to-end test exercises all the levels of your code, and you can use it to validate the integrity of your code.

So, why don’t people think to run more of these end-to-end tests? You know the answers.

First, end-to-end tests run more slowly. Page rendering takes time – your test code needs to manipulate the browser or your mobile app, execute an HTTP request, receive an HTTP response, and render the received HTML, CSS, and JavaScript. Even if you run tests in parallel, they’re slower than unit or system tests.

Second, it takes a lot of effort to write good end-to-end tests. Your tests must exercise the application properly. You develop data and logic pre-conditions for each test so it can be run independently of others. And, you build test automation.

Third, you need two kinds of automation. You need a controller that allows you to control your app by entering data and clicking buttons in the user interface. And, most importantly, you need a validation engine that can capture your output conditions and match those with the ones a user would expect.

You can choose among many controllers for browsers or mobile devices. So why do your peers still write code that effectively spot-checks the DOM? Why not use a visual validation engine that can catch more bugs?
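To make the difference concrete, here is a minimal sketch of why spot-check assertions can miss failures that a whole-page comparison catches. It is a toy model, not how Applitools Visual AI works: the "page" is just a dictionary of element properties, and every name in it (`BASELINE`, `spot_check`, `snapshot_diff`, the element ids) is hypothetical.

```python
# Toy model: a "rendered page" is a dict mapping element ids to visible properties.
# All names and values are hypothetical and exist only for illustration.

BASELINE = {
    "login-button": {"text": "Log in", "x": 120, "y": 300, "visible": True},
    "username": {"text": "", "x": 120, "y": 200, "visible": True},
    "logo": {"text": "", "x": 20, "y": 20, "visible": True},
}


def spot_check(page):
    """Coded assertions: verify only the properties the test author thought of."""
    failures = []
    if page["login-button"]["text"] != "Log in":
        failures.append("login-button text")
    if not page["username"]["visible"]:
        failures.append("username visible")
    return failures


def snapshot_diff(baseline, page):
    """Whole-page comparison: report every property that differs from the baseline."""
    diffs = []
    for element_id in sorted(set(baseline) | set(page)):
        expected = baseline.get(element_id)
        actual = page.get(element_id)
        if expected is None:
            diffs.append(f"{element_id}: unexpected element")
        elif actual is None:
            diffs.append(f"{element_id}: missing element")
        else:
            for prop in sorted(set(expected) | set(actual)):
                if expected.get(prop) != actual.get(prop):
                    diffs.append(
                        f"{element_id}.{prop}: {expected.get(prop)!r} -> {actual.get(prop)!r}"
                    )
    return diffs


# A regression: the logo disappears and the button slides on top of the username field.
regressed = {
    "login-button": {"text": "Log in", "x": 120, "y": 200, "visible": True},
    "username": {"text": "", "x": 120, "y": 200, "visible": True},
    "logo": {"text": "", "x": 20, "y": 20, "visible": False},
}

print(spot_check(regressed))               # the spot checks report no failures
print(snapshot_diff(BASELINE, regressed))  # the comparison reports two differences
```

The spot checks pass because nobody wrote an assertion for the button position or the logo. The baseline comparison needs no per-property assertions, which is the same trade the article describes between coded assertions and a validation engine.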

Visual AI For Code Integrity

You have peers who continue to rely on coded assertions to spot-check the DOM. Then you have the 288 of your peers who did something different: they participated in the Applitools Visual AI Rockstar Hackathon. And they got to experience first-hand the value of Visual AI for building and maintaining end-to-end tests.

As I wrote previously, we gave participants five different test cases, asked them to write conventional tests for those cases, and then asked them to write tests for the same cases using Applitools Visual AI. For each submission, we checked the conditions each test writer covered, as well as the failing output behaviors each test writer caught.

As a refresher, we chose five cases that one might encounter in any application:

- Comparing two web pages

- Data-driven verification of a function

- Sorting a table

- Testing a bar chart

- Handling dynamic web content

For these test cases, we discovered that the typical engineer writing conventional tests to spot-check the DOM spent the bulk of their time writing assertion code. Unfortunately, the typical spot-check assertions missed failure modes: the typical submission got about 65% coverage. The engineers whose tests provided the highest coverage, by contrast, spent about 50% more time writing tests.

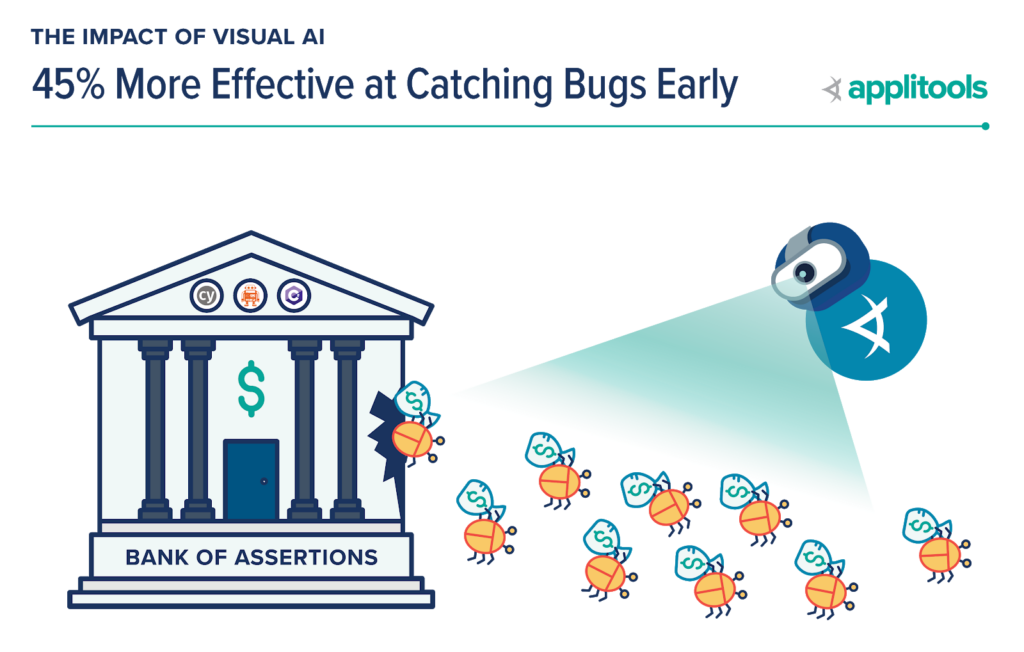

However, when using Visual AI for visual validation, two good things happened. First, everyone spent way less time writing test code. The typical engineer went from 7 hours of coding tests and assertions to about 1.2 hours of coding tests and Visual AI. Second, the average test coverage jumped from 65% to 95%. So, simultaneously, engineers took less time and got more coverage.
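The figures above can be turned into ratios with a few lines of arithmetic. This is only a back-of-the-envelope sketch using the numbers quoted in this article (7 hours versus 1.2 hours, 65% versus 95% coverage); the variable names are ours.

```python
# Numbers quoted in the article for the typical hackathon participant.
conventional_hours = 7.0
visual_ai_hours = 1.2
conventional_coverage_pct = 65
visual_ai_coverage_pct = 95

# How many times faster test authoring became.
speedup = conventional_hours / visual_ai_hours

# Coverage gain in percentage points, and relative to the conventional baseline.
coverage_gain_points = visual_ai_coverage_pct - conventional_coverage_pct
relative_gain_pct = coverage_gain_points / conventional_coverage_pct * 100

print(f"Test authoring speedup: {speedup:.1f}x")                # 5.8x
print(f"Coverage gain: {coverage_gain_points} points")          # 30 points
print(f"Relative coverage gain: {relative_gain_pct:.0f}%")      # 46%
```

The speedup matches the 5.8x figure cited for test creation, and the relative coverage gain works out to a little over 45%.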

Visual AI Helps You Catch More Bugs

When you find more bugs, more quickly, with less effort, that’s significant to your quality engineering efforts. You’re able to validate data, functional, and graphical integrity by focusing on the end-to-end test cases you run. You spend less time thinking about and maintaining all the assertion code that checks the result of each test case.

So Visual AI makes you more effective. How much more effective? Based on the data we reviewed, with typical coverage rising from 65% to 95%, you catch roughly 45% more bugs earlier in your release process (and, importantly, before they reach customers).

We have previously written about some of the other benefits that engineers get when using Visual AI, including:

- 5.8x Faster Test Creation – Authoring new tests is vital, especially for new features during a release cycle. Less time authoring means more time managing quality.

- 5.9x More Efficient Test Code – As with your team’s feature code, test code efficiency means you write less code, yet provide far more coverage. Sounds impossible, right? It’s not.

- 3.8x Improvement In Test Stability – Code-based frameworks rely on brittle locators and labels that break routinely. Maintaining them kills your release velocity and reduces coverage. What you need is self-maintaining and self-healing code that eliminates most of the maintenance. It sounds amazing and it is!

By comparing and contrasting the top participants (the prize winners) with the average engineer who participated in the Hackathon, we learned how Visual AI greatly helped the average engineer and made the top engineers much more efficient.

The bottom line with Visual AI: you will catch more bugs earlier than you do today.

More About The Applitools Visual AI Rockstar Hackathon

Applitools ran the Applitools Visual AI Rockstar Hackathon in November 2019. Any engineer could participate, and more than 3,000 from around the world signed up. Of those, 288 completed the Hackathon and submitted code. Their submissions became the basis for this article.

You can read the full report we wrote: The Impact of Visual AI on Test Automation.

In creating the report, we looked at three groups of quality engineers:

- All 288 Submitters – This includes any quality engineer who successfully completed the hackathon project. While over 3,000 quality engineers signed up to participate, this group of 288 people is the foundation for the report and amounted to 3,168 hours, or 80 weeks, or 1.5 years of quality engineering data.

- Top 100 Winners – To gather the data and engage the community, we created the Visual AI Rockstar Hackathon. The top 100 quality engineers who secured the highest point total for their ability to provide test coverage on all use cases and successfully catch potential bugs won over $40,000 in prizes.

- Grand Prize Winners – This group of 10 quality engineers scored the highest, representing the gold standard of test automation effort.
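As a quick sanity check on the size of that data set, the hours figure can be converted into per-person and calendar terms. This sketch assumes a 40-hour work week and a 52-week year, neither of which is stated in the report summary above.

```python
# Figures quoted for the "All 288 Submitters" group.
total_hours = 3168
submitters = 288

# Assumptions for the conversion (not stated in the article).
hours_per_week = 40
weeks_per_year = 52

hours_per_submitter = total_hours / submitters
work_weeks = total_hours / hours_per_week
work_years = work_weeks / weeks_per_year

print(f"Hours per submitter: {hours_per_submitter:.0f}")  # 11
print(f"Work weeks: {work_weeks:.1f}")                    # 79.2
print(f"Work years: {work_years:.1f}")                    # 1.5
```

Under those assumptions, the quoted 80 weeks and 1.5 years are rounded values, and the total corresponds to about 11 hours of recorded effort per submitter.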

By comparing and contrasting the time, effort, and effectiveness of these groups, we were able to draw some interesting conclusions about the value of Visual AI in speeding test-writing, increasing test coverage, increasing test code stability, and reducing test maintenance costs.

What’s Next?

You now know five of the core benefits we measured among engineers who used Visual AI:

- Spend less time writing tests

- Write fewer lines of test code

- Maintain fewer lines of test code

- Keep your test code much more stable

- Catch more bugs

So, what’s stopping you from trying out Visual AI for your application delivery process? Applitools lets you set up a free Applitools account and start using Visual AI on your own. You can download the white paper and read about how Visual AI improved the efficiency of your peers. And you can check out the Applitools tutorials to see how Applitools works with your preferred test framework and your favorite test programming language.

Cover Photo by michael podger on Unsplash