Mobile testing is challenging. Mobile test automation, i.e. automating tests to run on mobile devices, is even more challenging, because it requires additional tools, libraries and infrastructure setup before you can implement and run your automated tests on mobile devices.

This post covers the strategies and practices you should think about for your mobile test automation, including device and execution strategy, execution environment setup, automation practices and running your automated tests in the CI pipeline.

Lastly, there is also a link to a GitHub repository which can be used to start automation for any platform – Android, iOS, web, Windows. It also supports integration for Applitools Visual AI and can run against local devices as well as cloud-based device farms.

So, let’s get started. We will discuss the following topics:

- Test Designing

- Mobile Test Automation Strategy

- Automating the set up of the Mobile Test Execution Environment

- Mobile Test Automation Solution / Framework

- Visual Test Automation

- Instrumentation / Analytics Automation

- Running the Automated Tests

Test Designing

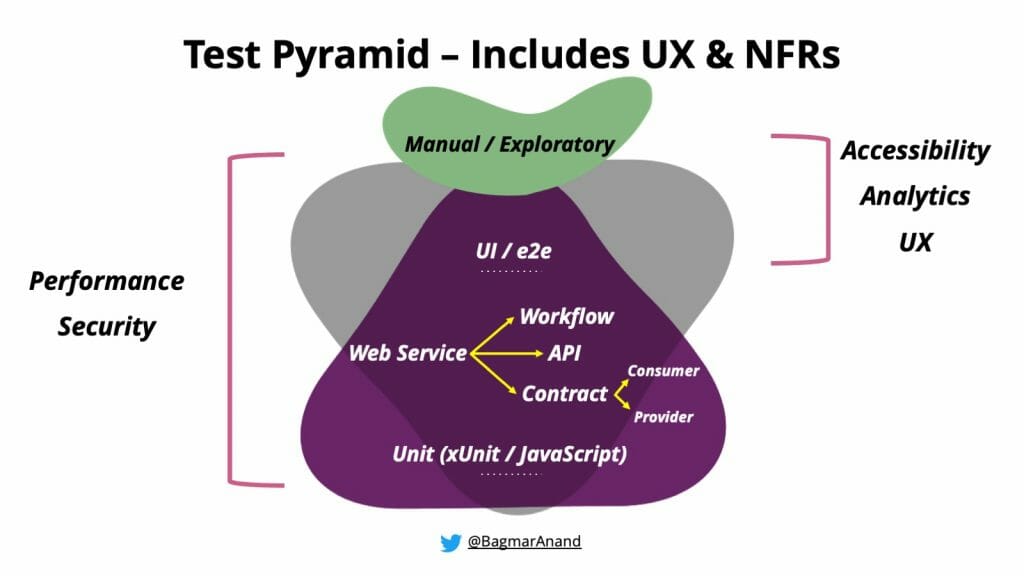

The Test Automation Pyramid is not just a myth or a concept. It actually helps teams shift left and get quick feedback about the quality of the product-under-test. This then allows humans to use their intelligence and skills to explore the product-under-test to find other issues which are not covered by automation.

To make test automation successful however, you need to consciously look at the intent of each identified test for automation, and check how low in the pyramid you can automate it.

A good automation strategy for the team would mean that you have identified the right layers of the pyramid (in context of the product and tech stack).

Also, an important thing to highlight is that each layer of the pyramid has a certain type of impact on the product-under-test. Refer to the gray-colored inverted pyramid in the background of the above image.

This means:

- Each unit test will test a very specific part of the product logic.

- Each UI / end-2-end test will impact the breadth of the product functionality.

In the context of this post, we will be focusing on the top layer of the Test Automation Pyramid – the UI / end-2-end Tests.

To make the web / mobile test automation successful, you need to identify the right types of tests to automate at the top-layer of the automation pyramid.

Mobile Test Automation Strategy

For mobile test automation, you need to have a strategy for how, when and where you need to run the automated tests.

Automated Test Execution Strategy

Based on your device strategy, you also need to think about how and where your tests will run.

If your product supports it, you would want the tests to run on browsers / emulators / devices. These could be on the local machine or in a browser / device farm, and the execution could be triggered manually or automatically from your CI server.

In addition, you also need to think about fast feedback and coverage. For this there are different considerations – sequential execution, distributed execution and parallel (with distributed) execution.

- Sequential execution: all tests run, in any order, but one at a time

- Distributed execution (across devices of the same type):

  - If you have ‘x’ devices of the same type, the tests are split across them: each test runs once, on whichever device is available

  - It is preferable to distribute the tests across the same type of device, to prevent device-specific false positives / negatives

  - This gives you faster feedback

- Parallel execution (across different types of devices, ex: One Plus 6, One Plus 7):

  - If you have ‘x’ types of devices, all tests run on each device type

  - This gives you wider coverage

- Parallel with Distributed execution (a combination of the Parallel and Distributed execution types):

  - If you have ‘x’ types of devices, and ‘y’ devices of each type (ex: 3 One Plus 6, 2 One Plus 7):

    - All tests run on each device type, i.e. One Plus 6 & One Plus 7

    - The tests are distributed across the available One Plus 6 devices

    - The tests are distributed across the available One Plus 7 devices
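As a rough illustration of the "Parallel with Distributed" mode, here is a minimal Python sketch. The function name `plan_execution` and the device names are made up for this example, and the round-robin split is a simplifying assumption: a real runner would more likely hand the next test to whichever device becomes free first.

```python
from itertools import cycle


def plan_execution(tests, devices_by_type):
    """Return {device_name: [tests]} for parallel-with-distributed execution.

    Every device type runs the full suite (parallel, for coverage). Within a
    type, the suite is dealt round-robin across that type's devices
    (distributed, for speed).
    """
    plan = {}
    for devices in devices_by_type.values():
        for device in devices:
            plan[device] = []
        # Deal the full suite across this type's devices, one test at a time.
        for test, device in zip(tests, cycle(devices)):
            plan[device].append(test)
    return plan


if __name__ == "__main__":
    suite = ["login", "balance", "transfer", "logout", "profile"]
    lab = {
        "One Plus 6": ["op6-a", "op6-b", "op6-c"],  # hypothetical device names
        "One Plus 7": ["op7-a", "op7-b"],
    }
    for device, assigned in plan_execution(suite, lab).items():
        print(device, assigned)
```

With a single device type this reduces to distributed execution, and with one device per type it reduces to parallel execution.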

Your Test Strategy needs to have a plan for achieving coverage. Analytics data can tell you what types of devices or OS or capabilities are important to run your tests against.

Device Testing Strategy

Each product has different needs and requirements of the device capabilities to function correctly. Based on the context of your product, look at how you can identify a Mobile Test Pyramid that suits you.

The Mobile Test Pyramid allows us to quickly start testing our product without the need for a lot of real devices in the initial stages. As the product moves across environments, you can progressively move to using emulators and real devices.

Some important aspects to identify here:

- Can you use the browser for early testing?

- Does your product work well (functionally and performance-wise) in emulators?

- What type of real devices do you need, and how many? Is there an OS version limitation, or are specific capabilities required? Are there enough devices for all team members (especially since most of us work remotely these days)?

In addition, do you plan to set up your real devices in a local lab setup or do you plan to use a device farm (on-premise or cloud based)? Either approach needs to be thought through, and the solution needs to be designed accordingly.

Automating the set up of the Mobile Test Execution Environment

Depending on your test automation tech stack, the setup can be daunting for people who are new to it. Also, different versions of the tools / libraries being used can produce different execution results.

To help new people start easily, and keep the test execution environment consistent, the setup should be automated.

In case you are using Appium from Linux or Mac OS machines, you can refer to this blog post for automatic setup of the same – https://applitools.com/blog/automatic-appium-setup/

Mobile Test Automation Solution / Framework

Your automation framework should have some basic criteria in place:

- Tests should be easy to read and understand

- Framework design should allow for easy extensibility and scalability

- Tests should be independent, so that they can run in any sequence, and in parallel with other tests

Refer to this post for more details on designing your automation framework.

The team needs to decide its criteria for automation, and its execution. Based on the identified criteria, the framework should be designed and implemented.

Test Data Management

Test data is an often-ignored aspect. You may design and plan for everything, but if you do not have a good test data strategy, all that effort can go to waste, and you would end up with sub-optimal execution.

Here are some things to strive for:

- It is ideal if your tests can create the data they need.

- If the above is not possible, then have seeded data that your tests can use. It is important to have sufficient data seeded in the environment, that will allow for test independence, and parallel execution

- Lastly, if test data cannot be created or seeded, build intelligence into your test implementation to “query” the data that each of your tests needs, and use that data dynamically in your execution
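The order of preference above (create, then seeded, then query) can be sketched as a simple fallback chain. This is only an illustration in Python: `resolve_test_data` and the three provider functions are hypothetical names, and the providers are stubs standing in for real API calls or database lookups.

```python
from typing import Callable, List, Optional

# Each provider returns a record for the test, or None if it cannot supply one.
Provider = Callable[[str], Optional[dict]]


def resolve_test_data(test_name: str, providers: List[Provider]) -> dict:
    """Try the providers in priority order and return the first record found."""
    for provider in providers:
        record = provider(test_name)
        if record is not None:
            return record
    raise LookupError(f"No test data available for {test_name!r}")


def create_via_api(test_name: str) -> Optional[dict]:
    # Stub: a real version would create fresh data through the product's API.
    # Returning None simulates an environment where creation is not possible.
    return None


SEEDED = {"transfer_funds": {"user": "seed-user-1", "balance": 6000}}


def from_seed(test_name: str) -> Optional[dict]:
    # Seeded data: one dedicated record per test keeps the tests independent.
    return SEEDED.get(test_name)


def query_existing(test_name: str) -> Optional[dict]:
    # Stub: a real version would search the environment for a suitable record.
    return {"user": "queried-user", "balance": 100}


PROVIDERS = [create_via_api, from_seed, query_existing]
```

Calling `resolve_test_data("transfer_funds", PROVIDERS)` returns the seeded record here, because the creation stub returns `None`; a test with no seeded record falls through to the query step.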

OS Support

It should be possible to implement and execute the tests on the OS the team members are using, and on the OS available on the CI agents. So if your team members are on Windows, Linux and macOS, keep that in mind when implementing the tests and their utilities, so that they work in all of these OS environments.

Platform Support

Typically the product-under-test is available to end-users on several platforms. Ex: as an Android app distributed via the Google Play Store, as an iOS app distributed via Apple’s App Store, or via the web.

Your test framework should support all the platforms your product is available on.

My approach for such a multi-platform product-under-test is simple:

- Tests should be specified once, and should be able to run on any platform, determined by a simple environment variable / configuration option

To that effect, I have built an open-source automation framework, that supports the automation of web, Android, iOS, and Windows desktop applications. You can find that, with some sample tests here – https://github.com/znsio/unified-e2e. You can refer to the “Getting Started” section.
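Independently of that framework, the "specify once, run anywhere" idea can be sketched in a few lines of Python. This is not how the repository above implements it; the `PLATFORM` variable name, the screen classes and the `user_can_login` scenario are all assumptions made for illustration.

```python
import os

SUPPORTED_PLATFORMS = ("android", "web")


def target_platform(env=None):
    """Read the platform from the (assumed) PLATFORM variable; default to android."""
    env = os.environ if env is None else env
    platform = env.get("PLATFORM", "android").strip().lower()
    if platform not in SUPPORTED_PLATFORMS:
        raise ValueError(f"Unsupported platform: {platform!r}")
    return platform


class AndroidLoginScreen:
    def login(self, user):
        # A real implementation would drive the native app here.
        return f"android: typed {user} into the native login form"


class WebLoginScreen:
    def login(self, user):
        # A real implementation would drive the browser here.
        return f"web: typed {user} into the browser login form"


LOGIN_SCREENS = {"android": AndroidLoginScreen, "web": WebLoginScreen}


def user_can_login(env=None):
    """The scenario is written once; only the screen implementation varies."""
    screen = LOGIN_SCREENS[target_platform(env)]()
    return screen.login("alice")
```

The scenario never mentions a platform. Running it with `PLATFORM=web` in the environment picks the web implementation, and leaving the variable unset falls back to Android.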

Reporting

Having good test reports automatically generated as part of your test execution allows you to:

- Know what happened during the execution

- Do root-cause analysis when a test fails, without having to rerun the test and hope the same failure is seen again

From a mobile test automation perspective, the following should be available in your test reports:

- For each test, the device details should be available; this is especially valuable if you are running tests on multiple devices

- Device logs for the duration of the test execution should be part of the reports

  - Clear the device logs before test execution starts; once the test completes, capture the logs and attach them to the reports

- Device performance details – battery, cpu, screen refresh / frozen frames, etc

- Relevant screenshots from test execution (the test framework should have this capability, and the implementer should use it as per each test context)

- Video recording of the executed test

- Ability to add tags / meaningful metadata to each test

- Failure analysis capability, and the ability to create team-specific dashboards to understand the test results
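For Android devices, the "clear the logs, run the test, capture the logs" step from the list above could look roughly like the Python sketch below. It assumes `adb` is on the PATH and uses its `logcat -c` (clear) and `logcat -d` (dump and exit) options; the helper names are hypothetical, and iOS would need different tooling.

```python
import subprocess


def logcat_command(serial, *args):
    """Build an adb logcat command line for one specific device."""
    return ["adb", "-s", serial, "logcat", *args]


def run_with_device_logs(serial, test, run=subprocess.run):
    """Clear the device log, run the test, then return (error, log_text).

    `error` is None when the test passed, or the AssertionError it raised.
    `run` is injectable so the helper can be exercised without a device.
    """
    run(logcat_command(serial, "-c"), check=True)  # clear old log entries
    error = None
    try:
        test()
    except AssertionError as exc:
        error = exc  # keep going so the logs are still captured
    dump = run(
        logcat_command(serial, "-d"),  # dump the current log and exit
        check=True,
        capture_output=True,
        text=True,
    )
    return error, dump.stdout
```

The returned log text covers only the duration of that one test, so it can be attached to the test's entry in the report as it is.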

In addition, reports should be available in real time. One should not need to wait for all the tests to have finished execution to see the status of the tests.

Assertion Criteria

Since we are doing end-2-end test automation, the tests we are automating are scenarios / workflows. It is quite possible that, during execution, we encounter different types of inconsistencies in the product functionality. Some of these inconsistencies mean there is no point proceeding with that specific scenario, but in many cases the execution can continue. This is where using hard asserts vs soft asserts can be very helpful: a hard assert stops the test at the first failure, while a soft assert records the failure and lets the test continue.

Let’s take an example of automating a banking scenario – where the user logs in, then sees the account balance, and then transfers a portion of the balance to another account.

In this case, if the user is unable to login, there is no point proceeding with the rest of the validation. So this should be a hard-assertion.

However, let’s say the test logs in, but the balance is 5000 instead of 6000. Since our test implementation takes a portion of the available balance – say 10%, for transferring to another account, the check on the balance can be a soft-assertion.

When the test completes, it should then fail with the details of all the soft-assertion failures found in the execution.

This approach, which should be used very consciously, will allow you to get more value from your automation, instead of the test stopping at the first inconsistency it finds.
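The banking example can be sketched in Python as follows. `SoftAssertions` is a hand-rolled helper written for this illustration (many test libraries ship their own equivalent), and `FakeBankApp` is a hypothetical in-memory stand-in for the app under test.

```python
class SoftAssertions:
    """Collects failures and reports them all together at the end."""

    def __init__(self):
        self.failures = []

    def check(self, condition, message):
        if not condition:
            self.failures.append(message)

    def assert_all(self):
        if self.failures:
            details = "\n- ".join(self.failures)
            raise AssertionError("Soft assertion failures:\n- " + details)


class FakeBankApp:
    """In-memory stand-in for the app under test (hypothetical)."""

    def __init__(self, balance):
        self._balance = balance

    def login(self, user, password):
        return password == "secret"

    def balance(self):
        return self._balance

    def transfer(self, amount):
        self._balance -= amount


def transfer_scenario(app, password="secret"):
    soft = SoftAssertions()
    # Hard assertion: without a login, nothing else can be validated.
    assert app.login("alice", password), "login failed"
    balance = app.balance()
    # Soft assertion: a wrong balance is a bug, but the transfer can still run.
    soft.check(balance == 6000, f"expected balance 6000, got {balance}")
    amount = balance // 10  # transfer 10% of whatever is available
    app.transfer(amount)
    soft.check(
        app.balance() == balance - amount,
        "balance was not reduced by the transferred amount",
    )
    soft.assert_all()  # fail now, listing every soft failure collected
```

With a starting balance of 5000, the scenario still performs the transfer and only then fails, reporting the balance mismatch. With a wrong password, it stops at the login and never attempts the transfer.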

Visual Test Automation

Let’s take an example of validating a specific functionality in a virtual meeting platform. The scenario is: The host should be able to mute all participants.

A traditional automated test would follow these steps:

- Host starts a meeting

- More than ‘x’ participants join the meeting

- Host mutes all participants

- The assertion needs to be done for each of the participants to check if they are muted

Though feasible, the last step (asserting the mute state of every participant) needs a significant amount of code to be written.

But what if a bug in the product also turns off each participant’s video when the host force-mutes them? How would your traditionally automated test catch this?

A better way would be to use a combination of functional and Applitools’ AI powered visual testing in such a case, where the following would now be done:

- Host starts a meeting

- More than ‘x’ participants join the meeting

- Host mutes all participants

- Using an Applitools visual assertion, you can now check the functionality as well as other issues that your test does not directly validate. This automatically increases your test coverage while reducing the amount of code to be written

In addition, you want to ensure that your app looks great, consistent and as expected on any device. So this is an easy to implement solution which can give you a lot of value in your quest for higher quality!

Instrumentation / Analytics Automation

Analytics is typically one of the key ways to understand how end-users use your product (web / app).

In the case of the web, if some analytics event capture is not working as expected, it is “relatively” easy for you to fix the problem, and do a quick release and you will be able to start seeing that data.

In the case of mobile apps though, once the app is released and the user has installed it, you will see the data correctly only after you release the app again (with the fix, of course) AND the user updates it. Hence you need to plan the release approach of your mobile apps very carefully. See this webinar by Justin and me, “Stop Testing (Only) The Functionality of Your Mobile Apps!”, on the different aspects of mobile testing and mobile test automation that one needs to think about and include in the testing and automation strategy.

Coming back to analytics, it is easily possible, with some collaboration with the developers of the app, to validate the analytics events being sent as part of your mobile test automation. There are various approaches to this, but that is a separate topic for discussion.

Running the Automated Tests

The value of automation lies in running the tests as often as we can, on every change in the product-under-test. This helps identify issues as soon as they are introduced in the product code.

It can also highlight that the tests need to be updated in case they are out of sync with expected functionality.

From a mobile test automation perspective, we need to do a few additional steps to make these automated tests run in a truly non-intrusive and fully automated manner.

Automated Artifact Generation, in Debug Mode

When automating tests for the web, you do not need to worry about an artifact being generated. When the product build completes, you can simply deploy the artifact(s) to an environment, update configuration(s), and your tests can run against it.

However, for mobile test automation the process is different.

- You need to build the mobile app (apk / ipa) – and have it point to a specific environment

To clarify this: the apk / ipa points to backend servers via APIs. This needs to be configurable, so that the app can point to your test environment vs the production environment.

- You also need the capability to generate the artifacts for each type of environment (ex: dev, qa, pre-prod, prod) from the same snapshot of the code.

- You need this artifact to be built in debug mode, to allow the automated tests to interact with it

Once the artifact is generated, it should automatically trigger the end-2-end tests.

Teams need to invest in artifacts with the above capabilities generated automatically. Ideally, these artifacts are generated for each change in the product code base – and subsequently tests should run automatically against it. This would allow us to easily identify what parts of the code caused the tests to fail – hence fix the problem very quickly.

Unfortunately, I have seen an antipattern in far too many teams – where the artifact is created from some developer machine (which may have unexpected code as well), and shared over email or some weird mechanism. If your team is doing this, stop it immediately, and invest in automating the mobile app generation via a CI pipeline.

Running the Automated Mobile Tests as Part of CI Execution

Running the tests as part of CI needs a lot of thought from a mobile test automation perspective.

Things to think about:

- How are your CI agents configured? They should use the same automated script as discussed in the “Test Execution Environment” section

- Do you have access to the CI agents? Can you add real devices to those agents? Real devices need regular maintenance, and you would need access to them for that.

- Do you have a Device Farm (on-premise or cloud-based)? You need to be able to control the device allocation and optimal usage

- How will you get the latest artifact from the build pipeline automatically and pass it to your framework? The framework then needs to clean up the device(s) and install this latest artifact automatically, before starting the test execution.

The mobile test automation framework should be fully configurable from the command line to allow:

- Local Vs CI-based execution

- Run against local devices or a device farm

- Run a subset of tests, or full suite

- Run against any supported (and implemented-for) environment

- Run against any platform that your product is supported on – ex: android, iOS, web, etc.
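As an illustration of such command-line configurability, here is a minimal Python sketch using the standard `argparse` module. The flag names (`--platform`, `--target-env`, `--device-source`, `--ci`, `--tags`) and their defaults are assumptions made for this example, not the options of any particular framework.

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser(description="Run the end-2-end tests")
    parser.add_argument(
        "--platform",
        choices=["android", "ios", "web", "windows"],
        default="android",
        help="platform to run the tests against",
    )
    parser.add_argument(
        "--target-env",
        choices=["dev", "qa", "pre-prod", "prod"],
        default="qa",
        help="environment the app under test points to",
    )
    parser.add_argument(
        "--device-source",
        choices=["local", "device-farm"],
        default="local",
        help="where the devices come from",
    )
    parser.add_argument(
        "--ci",
        action="store_true",
        help="set when the run is triggered from the CI server",
    )
    parser.add_argument(
        "--tags",
        default="",
        help="comma-separated tags selecting a subset; empty runs the full suite",
    )
    return parser


def parse_run_config(argv=None):
    """Parse the command line into a plain dict the framework can consume."""
    args = build_parser().parse_args(argv)
    config = vars(args)
    config["tags"] = [tag.strip() for tag in args.tags.split(",") if tag.strip()]
    return config
```

A CI job could then pass something like `--platform ios --device-source device-farm --tags smoke --ci`, while a developer running locally relies on the defaults.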