Myopia means nearsightedness. If you write functional tests, you likely suffer from functional test myopia. You focus on your expected outcome and your test coverage. But you miss the big picture.

We all know the joke about a guy on his knees under a streetlamp one night. As he fumbles around, a passerby notices him.

“Sir,” says the passerby, “what are you doing down there?”

“I’m looking for my wallet,” replies the guy.

“Where did you lose it?” asks the passerby.

“Down there, I think,” says the guy, pointing to a place down the darkened street.

“Why are you looking here, then?” wonders the passerby.

“Ah,” says the guy, “I can see here.”

Source: http://first-the-trousers.com/hello-world/

Functional testing of your web app works the same way. You test what you expect to see. But your functional tests can’t see beyond their code to the daylight of the page the browser actually renders.

What Are Your Goals For Web App Testing?

Let me start by asking you – what goals do you set for your web app testing?

If you’re building a web app, have you ever wondered why you bother testing it in a browser? If all you check is whether the app responds correctly to inputs and selectors, why not test with curl or a text-based browser like Browsh?

The question is rhetorical. You know that the browser renders your app, and you want to make sure your app renders correctly.

But think about it: run your functional tests through a text-based browser that turns web pages into something out of Minecraft, and they will still pass, even though the pages are not rendered properly. That shows how limited traditional functional testing tools are.

I ask this question not to trip you up, but to help clarify your expectations.

In my experience, functional testers check that the app behaves correctly with valid input, and that it handles invalid input, workflow exceptions, and server exceptions.

Whatever your goals, when you test your applications, you want to see them behave correctly in both normal and exception cases. You hope to cover as much of the app code as possible, and you want to validate that the app works as designed.

Why Functional Test Automation Can Fail

Functional testing myopia results from the code-based nature of functional testing. To drive web app activity, most engineers use a browser automation tool such as Selenium WebDriver, which suits this need well. Other tools, such as Cypress, can also drive the application.

Similarly, a code-based tool evaluates the application’s response in HTML. Using TestNG, JUnit, Cypress, or a similar tool, the test inspects the DOM for an element matching an exact or relative locator for the coder’s intended response, and validates that:

- The response text field exists

- The text in that field matches expectations

In principle, a functional test could validate the entire DOM of the page produced by a given test action. In practice, only the expected response gets checked.

Just like the guy on his knees under the streetlamp, functional testing validates only the conditions the test can check. And herein lies the myopia. Even if the code could validate the entire DOM response, it could not validate the rendered page. Functional testing tests pre-rendered code.
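To make the myopia concrete, here is a minimal sketch in Python of the locator-plus-text assertion described above. It uses only the standard library’s `html.parser` instead of Selenium WebDriver or Cypress so that it runs on its own. The helper names, the `msg` id, and both page snippets are hypothetical. The check passes for a page whose text no user can see, because nothing in it ever looks at the rendered styling.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not change the depth count.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class TextById(HTMLParser):
    """Hypothetical helper: collects the text inside the element whose id matches."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.depth = 0      # > 0 while the parser is inside the target element
        self.found = False
        self.text = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self.found = True
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.text.append(data)


def functional_check(html, element_id, expected_text):
    """Locator-plus-text assertion: the element exists and its text matches."""
    parser = TextById(element_id)
    parser.feed(html)
    parser.close()
    return parser.found and "".join(parser.text).strip() == expected_text


GOOD_PAGE = '<div id="msg">Order placed</div>'
# White text on a white background: a user sees nothing, but the DOM looks fine.
INVISIBLE_PAGE = '<div id="msg" style="color:#fff;background:#fff">Order placed</div>'

print(functional_check(GOOD_PAGE, "msg", "Order placed"))       # True
print(functional_check(INVISIBLE_PAGE, "msg", "Order placed"))  # True
```

Both pages pass. The `style` attribute is parsed but never interpreted, just as a real DOM assertion on element text never asks what the user actually sees.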

The gap between what a functional test checks and what a user experiences explains functional test myopia. Even our best browser automation code checks only a tiny portion of page attributes, and it cannot match the full visual experience of a human user.

Alone, functional tests miss things like:

- Overlapping text

- Overlapping action buttons

- Action buttons colored the same as the surrounding text

- User regions or action areas too small for a user to see them

- Invisible or off-page HTML elements that a user wouldn’t see

How Do You Know Your App Renders Correctly?

Can you tell whether your application appears the way your customers expected or the way your designers intended? How do you validate app rendering?

Many app developers have leaned on manual testing for validation. One of my good friends has made a career as a manual QA tester. His job: run an app through its paces as a real user might, and validate the app’s behavior.

Manual testing can uncover visual bugs missed by functional test myopia. But manual testing suffers from three downsides:

- Speed – you might have several apps, with dozens of responsive pages to test on several screen sizes, with every daily build. Even if a tester can check a page thoroughly in a couple of minutes, those minutes add up to delays in your release cycle.

- Evaluation Inconsistency – Manual testers can easily miss small issues that might affect users or indicate a problem.

- Coverage Inconsistency – Test coverage by manual testers depends on their ability to follow steps and evaluate responses, which varies from tester to tester.
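To see how the speed problem compounds, here is a back-of-the-envelope estimate. The numbers are illustrative assumptions, not measurements: 3 apps, 20 responsive pages each, 4 screen sizes, and 2 minutes to check each page at each size.

$$
T = N_{\text{apps}} \times N_{\text{pages}} \times N_{\text{sizes}} \times t_{\text{page}}
  = 3 \times 20 \times 4 \times 2\ \text{min} = 480\ \text{min} = 8\ \text{h per build}
$$

Under these assumptions, a daily build consumes a full working day of manual checking before a single bug is filed.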

Test engineers who consider automating tests of rendered applications want the automation equivalent of a manual tester: a tool that can inspect the visual output and judge whether the rendered page matches expectations. They want a visual testing strategy that speeds up the testing process and validates visual behavior through visual test automation.

What they don’t want is a bot that can’t tell whether Browsh has rendered their web pages Minecraft-style.

Source: https://pxhere.com/en/photo/536919

Legacy Visual Testing Technologies

Given the number of visual development tools available, one might expect visual testing tools to match them in scope and availability. Unfortunately, they don’t. A single app can be built to run in native mobile and desktop browsers, on screens ranging from a five-inch LCD to a 4K display, yet the two most commonly used visual testing technologies remain pixel diffing and DOM diffing. Both have issues.

DOM diff comparisons don’t actually compare the visual output. DOM diffs:

- Identify web page regions through the DOM

- Can expose layout changes when the regions differ

- Flag potential differences when a region’s content or CSS changes

- Remain blind to a range of visual changes, such as different underlying content behind the same identifier
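The following hypothetical sketch shows the idea behind a DOM diff. It assumes a simple approach, not any particular product’s algorithm: flatten each version of the markup into `(path, kind, value)` records with Python’s `html.parser`, then report records that appear in only one version. A class change shows up. But if the server swaps the bytes behind `logo.png`, or edits a stylesheet the page links to, the markup is unchanged and the diff reports nothing.

```python
from html.parser import HTMLParser

VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class DomFlattener(HTMLParser):
    """Hypothetical helper: flattens markup into (path, kind, value) records."""

    def __init__(self):
        super().__init__()
        self.stack = []     # open tags, used to build paths like "div#hero/p"
        self.records = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        label = tag + ("#" + attrs["id"] if "id" in attrs else "")
        path = "/".join(self.stack + [label])
        # Serialize attributes in a stable order so equal tags compare equal.
        value = " ".join(f'{k}="{v}"' for k, v in sorted(attrs.items()))
        self.records.append((path, "attrs", value))
        if tag not in VOID_TAGS:
            self.stack.append(label)

    def handle_endtag(self, tag):
        # "<img/>" triggers an end tag too; void tags were never pushed.
        if tag not in VOID_TAGS and self.stack:
            self.stack.pop()

    def handle_data(self, data):
        if data.strip():
            self.records.append(("/".join(self.stack), "text", data.strip()))


def dom_diff(old_html, new_html):
    """Return records present in only one version of the markup."""
    old, new = DomFlattener(), DomFlattener()
    old.feed(old_html)
    new.feed(new_html)
    return sorted(set(old.records) ^ set(new.records))


BASE = ('<div id="hero"><img id="logo" src="logo.png">'
        '<p class="tagline">Fast tests</p></div>')
# Same markup, but assume logo.png was replaced on the server: the DOM cannot tell.
print(dom_diff(BASE, BASE))  # []
# A class change is visible in the DOM, whether or not it changes the rendering.
print(dom_diff(BASE, BASE.replace('class="tagline"', 'class="tagline hidden"')))
```

The second call reports the `p` element’s old and new `class` values. Whether that change is visible on screen depends on the CSS, which the DOM diff never evaluates.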

Pixel diffing compares screenshots pixel by pixel to determine whether page content has changed. But pixel rendering can vary with the browser version, operating system, and graphics card. Anti-aliasing settings and image rendering algorithms can also produce pixel differences. While pixel diffs can identify real visual differences, they also flag insignificant differences that no real user could distinguish. As a result, pixel diffs suffer from false positives: reported differences that you must investigate, only to conclude that nothing meaningful changed. In other words, a waste of time.
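Here is a minimal pixel-diff sketch. It assumes screenshots represented as plain lists of RGB tuples rather than real image files; a real tool would decode PNGs with an imaging library. The `pixel_diff` helper and its `tolerance` parameter are hypothetical. In the sample, two edge pixels shift by two brightness levels, the kind of anti-aliasing difference no user would notice, yet an exact comparison reports both as changes.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def pixel_diff(a: Image, b: Image, tolerance: int = 0) -> int:
    """Count pixels where any RGB channel differs by more than `tolerance`."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same dimensions")
    changed = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            if max(abs(ca - cb) for ca, cb in zip(pa, pb)) > tolerance:
                changed += 1
    return changed


WHITE, BLACK = (255, 255, 255), (0, 0, 0)
# A tiny "screenshot" of a glyph edge: white, anti-aliased gray, black.
baseline = [[WHITE, (128, 128, 128), BLACK],
            [WHITE, (128, 128, 128), BLACK]]
# Same page rendered on another machine: edge pixels shift by 2 levels.
other_gpu = [[WHITE, (126, 126, 126), BLACK],
             [WHITE, (130, 130, 130), BLACK]]
# A real regression: the glyph has disappeared.
broken = [[WHITE, WHITE, WHITE],
          [WHITE, WHITE, WHITE]]

print(pixel_diff(baseline, other_gpu))               # 2 (false positives)
print(pixel_diff(baseline, other_gpu, tolerance=8))  # 0
print(pixel_diff(baseline, broken, tolerance=8))     # 4
```

A tolerance suppresses some of this noise, but any fixed threshold is a guess: set it too low and rendering noise still triggers reports, set it too high and real changes, such as a faint but wrong color, slip through.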

AI and Visual Testing

Over the past decade, visual testing has advanced from stand-alone tools to integrated visual testing tools. Some of these tools are open source. Others are commercial tools that leverage new technologies.

The most promising approach uses computer vision technology (Visual AI, the technology underlying Applitools Eyes) to distinguish visual changes between versions of a rendered page. Visual AI replaces pixel diffing with the same kind of computer vision technology found in self-driving car development.

Source: https://www.teslarati.com/wp-content/uploads/2017/07/Traffic-light-computer-vision-lvl5.jpg

Instead of comparing pixels, Visual AI perceives distinct visual elements, such as buttons and text fields, and compares their presentation as a human would see them. As a result, Visual AI “sees” a web page with much the same richness that a human user does, which gives it a much higher level of accuracy in test validation than pixel diffing.

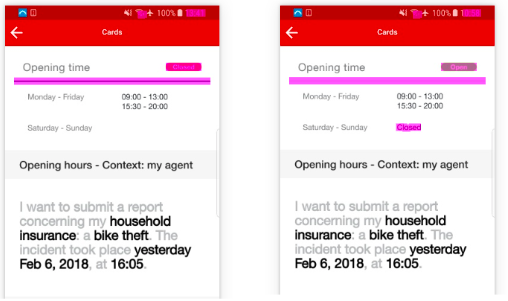

Here’s an example of Visual AI in action:

Applitools Eyes, a Visual AI tool, has been used by many companies to add visual test automation to their web app testing. These companies have not only moved beyond functional test myopia; they can now rapidly accept or reject visual changes in their applications with a visual validation workflow.

Broaden Your Vision

Whether you code web apps or write test automation code, you want to find and fix issues as early as possible. Visual validation tools let you bring visual testing into your development process, so you can automate both behavior and rendering tests earlier. With visual validation, you can see what you’re doing and what your customers will experience.

Don’t get stuck in functional test myopia.

For More Information

Get a free Applitools account.

Request a demo of Applitools Eyes.

Check out the Applitools tutorials.